Upload files to SharePoint using OData!

I posted yesterday about some pain I felt when working with SharePoint and the OData API. To balance the story, this post covers some of the pleasure of working with it: uploading a file to a document library using OData!

This is really easy to do once you know how, but the Everest-sized learning curve is what makes it really hard to get right. You have both OData specialisations and SharePoint quirks to contend with. Before we start we need four things: the file (as a stream), its filename, its content type, and where it will go.

For this post I am uploading to a document library called Demo, which is why OData generated the name DemoItem. The item is a text file called Lorem ipsum.txt. Since I know it is a text file, I also know its content type is text/plain.

The code below is really simple. Here is what is going on:

- Line 1: I open the file using the System.IO.File class, which gives me the stream I need.

- Line 3: To communicate with the OData service I use the DataContext class, passing in the URI of the OData service. This class was generated when I added the service reference.

- Line 8: Here I create a DemoItem. Remember that in SharePoint everything is a list or a list item, even a document, so I need to create the item first. I set the item's properties over the next few lines. It is vital that you set these, and set them correctly, or the upload will fail.

- Line 16: I add the item to the context. The item is now tracked locally, but it is not in SharePoint yet. It is vital that this is done before you associate the stream.

- Line 18: I associate the file's stream with the item. Once again, this only happens locally, and SharePoint has not been touched yet.

- Line 20: SaveChanges does the actual writing to SharePoint.

```csharp
using (FileStream file = File.Open(@"C:\Users\Robert MacLean\Documents\Lorem ipsum.txt", FileMode.Open))
{
    DataContext sharePoint = new DataContext(new Uri("http://<sharepoint>/sites/ATC/_vti_bin/listdata.svc"));

    string path = "/sites/ATC/Demo/Lorem ipsum.txt";
    string contentType = "text/plain"; // MIME type of the file being uploaded

    DemoItem documentItem = new DemoItem()
    {
        ContentType = contentType,
        Name = "Lorem ipsum",
        Path = path,
        Title = "Lorem ipsum"
    };

    sharePoint.AddToDemo(documentItem);

    sharePoint.SetSaveStream(documentItem, file, false, contentType, path);

    sharePoint.SaveChanges();
}
```

Path Property

The Path property is vital. It is set on the item (line 12) and passed as the final parameter when I associate the stream (line 18). It must be the path where the file will exist on the server. It is relative to the server, not to the SharePoint site you are working in. For example:

- Path: /Documents/demo.txt
  - Server: http://sharepoint1
  - Site: /
  - Document Library: Documents
  - Filename: demo.txt

- Path: /hrDept/CVs/abc.docx
  - Server: http://sharepoint1
  - Site: /hrDept
  - Document Library: CVs
  - Filename: abc.docx
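Both examples follow the same rule: the site's path, then the document library, then the filename. Below is a minimal sketch of that rule as a helper. `SharePointPaths.BuildServerRelativePath` is a hypothetical name of my own and is not part of SharePoint or the generated proxy. The sketch also assumes the library's URL name matches its display name, which is not always true in SharePoint.

```csharp
using System;

static class SharePointPaths
{
    // Hypothetical helper: builds the server-relative path used for the item's
    // Path property and as the final (slug) argument of SetSaveStream.
    public static string BuildServerRelativePath(string sitePath, string library, string fileName)
    {
        // "/" (root site) becomes "", while "/hrDept/" becomes "/hrDept".
        string site = sitePath.Trim('/');
        string prefix = site.Length == 0 ? string.Empty : "/" + site;

        // Assumes the library's URL name is the same as its display name.
        return prefix + "/" + library.Trim('/') + "/" + fileName;
    }
}
```

Calling it with `"/sites/ATC"`, `"Demo"` and `"Lorem ipsum.txt"` gives the `/sites/ATC/Demo/Lorem ipsum.txt` path used in the upload code.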

Wrap-up

I still think WebDAV is worth looking at as a viable way to handle documents that have no metadata requirements. If you do have metadata requirements, this is a great alternative to the standard web services.

Cannot add a Service Reference to SharePoint 2010 OData!

SharePoint 2010 has a number of APIs (an API is a way we communicate with SharePoint). Some we have had for a while, like the web services, but one is new: OData. What is OData?

The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores.

The main reason I like OData over the web services is that it is lightweight and works well in Visual Studio. It also works easily across platforms, thanks to all the SDKs.

SharePoint 2010 exposes OData at the following URL: http(s)://<site>/_vti_bin/listdata.svc. You can consume it in Visual Studio exactly as you would a SharePoint web service: right-click the project and select Add Service Reference.


Once the service is loaded, each list appears as a contract on the left. To add it to your code, you just click OK and start using it.

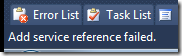

Add Service Reference Failed

The procedure above works well until it doesn’t, and oddly enough my current work found a situation that caused adding the reference to fail! The experience isn’t great when it does fail. The Add dialog closes and pops back up blank! Try it again and it disappears again, but this time it stays away.


If you check the status bar in Visual Studio, you will see an error message saying that it has failed. By this point, though, the service reference may already be listed in the project, and no code works because the add failed.


If you right-click the reference and choose Delete, it will refuse to delete, again because the add failed. The only way to get rid of it is to close Visual Studio and go to the service reference folder (<Solution Folder>\<Project Folder>\Service References). Delete the folder in there that matches the name of your service. You can then launch Visual Studio again and delete the service reference.

What went wrong?

Since Visual Studio gives us no way to know what went wrong, we need to get a lot more low level. Start by launching a web browser and going to the metadata URL for the service: http(s)://<site>/_vti_bin/listdata.svc/$metadata


In Internet Explorer 9 this just gives a useless blank page. However, if you use View Source on the right-click menu, IE 9 will show you the XML in Notepad. This XML is what Visual Studio is trying, and failing, to parse. To diagnose the cause we need to work with this XML, so save it to your machine with a .csdl file extension. The next tool we will use needs this special extension and refuses to work with files without it.

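If you would rather skip the browser step, you can also download the $metadata document from code. Below is a rough sketch. It assumes the current Windows account has read access to the site, and the class and method names are hypothetical. WebClient is used because it matches the .NET 4 era of this post; newer code would use HttpClient.

```csharp
using System;
using System.Net;

static class ListDataMetadata
{
    // Builds http(s)://<site>/_vti_bin/listdata.svc/$metadata from a site URL.
    public static Uri MetadataUri(string siteUrl)
    {
        return new Uri(siteUrl.TrimEnd('/') + "/_vti_bin/listdata.svc/$metadata");
    }

    // Saves the raw CSDL to disk so DataSvcUtil.exe can read it.
    // Assumption: the logged-in Windows credentials are allowed to read the site.
    public static void SaveAsCsdl(string siteUrl, string csdlPath)
    {
        using (WebClient client = new WebClient { UseDefaultCredentials = true })
        {
            client.DownloadFile(MetadataUri(siteUrl), csdlPath);
        }
    }
}
```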

The next step is to open the Visual Studio Command Prompt and navigate to where you saved the CSDL file. We will use a command-line tool called DataSvcUtil.exe. WCF people who know SvcUtil.exe will find it very familiar, but this one is specifically for OData services. All it does is take the CSDL file and produce a code contract from it. The syntax is very easy: datasvcutil.exe /out:<file.cs> /in:<file.csdl>


Immediately you will see a mass of red, and you know that red means error. In my case I have a list called 1 History, which the OData service knows by its gangster name, _1History. Reading the errors shows that this problem child is what breaks my ability to generate code.

Solving the problem!

Thankfully I do not need 1 History, so to fix this issue I need to clean the _1History references out of the CSDL file. I loaded the CSDL file in Visual Studio and started removing all references to the troublemaker. This included the item contract for the list, __1HistoryItem. I started by removing the item contract's EntityType, which is highlighted in the image alongside.


The next cleanup step is to remove all the associations to __1HistoryItem.

Finally, the last item I need to remove is the EntitySet for the list.

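Editing by hand works, but you can script the same clean-up with LINQ to XML if you need to repeat it whenever the lists change. Below is a minimal sketch; it is not the exact set of edits I made. It removes EntityType, EntitySet, Association, AssociationSet and NavigationProperty elements whose attributes mention a given token. It assumes that every association and navigation property tied to the troublesome list carries the list's name in its attributes. That is an assumption about the generated metadata, so re-run DataSvcUtil afterwards to confirm the file is consistent. `CsdlCleaner` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

static class CsdlCleaner
{
    // CSDL element kinds that can refer to a list or its item type.
    private static readonly HashSet<string> Kinds = new HashSet<string>
    {
        "EntityType", "EntitySet", "Association", "AssociationSet", "NavigationProperty"
    };

    // Associations name the types/sets they join on their <End> children, so they are
    // checked including descendants. Every other kind is judged on its own attributes only,
    // so an unrelated EntityType is not removed just because one navigation property matches.
    private static bool Mentions(XElement element, string token)
    {
        IEnumerable<XElement> scope = element.Name.LocalName.StartsWith("Association", StringComparison.Ordinal)
            ? element.DescendantsAndSelf()
            : new[] { element };
        return scope.Attributes().Any(a => a.Value.Contains(token));
    }

    // Removes the matching elements and returns how many outermost elements were removed.
    public static int RemoveReferences(XDocument csdl, string token)
    {
        List<XElement> matches = csdl.Descendants()
            .Where(e => Kinds.Contains(e.Name.LocalName) && Mentions(e, token))
            .ToList();

        // Nested matches (e.g. a NavigationProperty inside a removed EntityType) go with their parent.
        List<XElement> outermost = matches
            .Where(e => !e.Ancestors().Any(a => matches.Contains(a)))
            .ToList();

        foreach (XElement element in outermost)
        {
            element.Remove();
        }
        return outermost.Count;
    }
}
```

In use, you would load the saved file with `XDocument.Load`, call `CsdlCleaner.RemoveReferences(doc, "_1History")`, and write it back with `doc.Save` before running DataSvcUtil again.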

BREATHE! RELAX!

OK, now the hard work is done, so I jump back to the command prompt and re-run the DataSvcUtil tool, and this time it works:

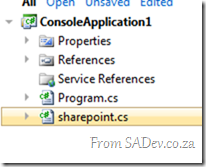

This produces a file, in my case sharepoint.cs, which I can add to my project just like any other class file. I can then use OData in my solution just like it is supposed to work!


Come and hang out with me at Tech·Ed Africa 2010!

Tech·Ed Africa 2010 is less than a month away. It is a massive conference where all things IT Pro and developer are discussed, and it is filled with networking (read: beer), wonderful sessions and great prizes!


I will be attending as a speaker again this year, so I thought I would share what I will be talking about. Before I get to that, though, I want to talk about a wonderful prize I am giving away.

Certain MVPs were given some Visual Studio 2010 Ultimate licenses to give away, and I was lucky enough to get three of them. Each license is valued at $11,600!! I will be giving these away at the community lounge as part of a fun game. Details of the game will be available at the lounge during the event.

Back to my shameless promotion. Tech·Ed has two types of sessions. Breakout Sessions are where I get up on stage and present and demo for about an hour. Whiteboard Sessions are interactive discussion sessions. I am lucky to have a few of each. Note: this is all subject to change.


Breakout Sessions

Intro to Workflow Services and Windows Server AppFabric

Windows Workflow Foundation 4 (WF4) provides a flexible, declarative programming model and a brand new runtime architecture that makes it easily accessible for .NET developers. What that means for developers is that WF4 can make it easier to put together your application logic, encapsulate complex control flow logic, and abstract complex programming tasks. WF4 also composes nicely with Windows Communication Foundation (WCF) for writing declarative workflow services that support content-based message correlation and long-running duplex conversations. When you combine the framework features with the new AppFabric capabilities in Windows Server to host and manage your workflows and services, you have a comprehensive workflow solution in Windows. In this session we will look at examples of how you can use WF4 in your application and service development to speed your development and simplify complex tasks, as well as how to build powerful, manageable workflow services with WF, WCF and AppFabric. Come find out how this powerful, testable framework can help you and your development team take programming to the next level.

WCF Made Easy with Microsoft .NET Framework 4 and Windows Server AppFabric

Windows Communication Foundation (WCF) is a flexible and powerful platform for building service-oriented applications, and with that flexibility comes some complexity. As of .NET Framework 4 – configuring, securing, hosting and managing WCF services has never been easier! WCF 4 and Windows Server AppFabric come together to help developers and IT administrators overcome the complexity. Come find out how much easier it is to configure WCF services in .NET 4 including alignment with the Microsoft ASP.NET configuration model and a reduced configuration footprint. Also learn Windows Server AppFabric features for the IT administrator, finally making it easier for IT administrators to easily access settings they care about such as security and throttling features; providing control over the hosting lifecycle of WCF services; and giving new visibility into faults, exceptions, and tracing and diagnostics features to help you manage your service deployments in production un-intrusively.

Windows Server AppFabric Caching: What It Is and When You Should Use It

The distributed in-memory caching capabilities of Windows Server AppFabric will change how you think about scaling your Microsoft .NET-connected applications. Come learn how the distributed nature of the cache allows large amounts of data to be stored in-memory for extremely fast access, how AppFabric’s integration with Microsoft ASP.NET makes it easy to add low-latency data caching across the Web farm, and discover the unique high availability features of AppFabric which will bring new degrees of scale to your data tier.

Whiteboard Sessions

Web Service Interop

This is a panel discussion on web service interop with myself, Nabeel Prior (Microsoft BizTalk Expert), Anton Delsink and Ryan Crawcour (BizTalk Expert from New Zealand).

Powering Rich Internet Applications: Windows Server AppFabric, Web Services, and Microsoft Silverlight

Visual Studio 2010 Training in Jo'burg

Notion Solutions will be running some Visual Studio 2010 training in Johannesburg at the end of October. These are not free courses, but the value you will get from training with one of the world’s top ALM companies will be worth it.

Tester Training with Visual Studio 2010 Ultimate (4-Day Course)

This course provides students with the knowledge and skills to use the latest testing tools provided by Visual Studio 2010 to improve their ability to manage and execute test plans. Test case creation and management will be covered, as well as test execution and automation practices using Test Manager. Creating and managing virtual lab environments using Lab Management 2010 will be discussed within the context of test planning and execution. By the end of the course, students are equipped to begin planning the implementation of Visual Studio 2010 for improving testing practices within their organizations.

October 25 – October 28, 2010 09:00 – 17:00

Overview of Visual Studio 2010 (4-Day Course)

The Visual Studio 2010 Overview course provides students with the knowledge and skills to improve the development practices of their entire organization and team. A broad set of features provided with Visual Studio 2010 will be covered to assist your team with application design, test management and execution, development standards and collaboration, automated build and release management, database schema management and test lab management. This course covers all of the most important features without going overly deep. By the end of the course, students are equipped to better understand how Visual Studio 2010 can be used within their organizations.

Pulled Apart - Part XIII: IMPF revised, again.

Note: This is part of a series, you can find the rest of the parts in the series index.

Pull started as a learning exercise, so I didn’t feel bad about using a new technology for a component, even if it might not be the best choice. IMPF was one such area where I decided to use a new technology. It hasn’t been smooth sailing, but I persisted because it was important for me to learn.

I was working on some enhancements and bug fixes recently, and I ended up having to put yet another hack into IMPF to handle the technology behind the scenes. In this case it was a Thread.Sleep to add a short delay between when things are written and when they are read. This was a wake-up call for me, because it felt dirty and stupid. So what did I do? I decided the best course of action was to step back and think about the best way to do this. I ripped out all the MemoryMappedFile and Win32 API code (about 200 lines) and replaced it with a WCF service/client implementation.

To do that I needed the contract, which is about as simple as it can get:

```csharp
using System.ServiceModel;

[ServiceContract]
interface IDataService
{
    [OperationContract]
    void SendMessage(string message);
}
```

Next I needed the implementation, which is also very simple, in large part thanks to the bus.

```csharp
class DataService : IDataService
{
    public void SendMessage(string message)
    {
        // Get the shared message bus from Pull's factory.
        IBus bus = Factory.Instance.Resolve<IBus>();

        if (!string.IsNullOrWhiteSpace(message))
        {
            if (message[0] == '!')
            {
                // Messages starting with '!' are commands.
                switch (message)
                {
                    case "!playunplayed":
                        bus.Broadcast(DataAction.LaunchUnplayedEpisode);
                        break;
                    case "!forcerefresh":
                        bus.Broadcast(DataAction.UpdateFeeds);
                        break;
                }
            }
            else
            {
                // It's a feed.
                bus.Broadcast(DataAction.ParseFeed, message);
            }
        }
    }
}
```

You may notice some command messages above. These are for new features I am working on.
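You can pull the routing decisions in SendMessage out into a pure function, which makes the little message protocol testable without WCF or the bus. Below is a sketch. `MessageRoute` and `MessageRouter` are hypothetical names, and the enum only mirrors the three DataAction values used above. Like the original, it silently ignores empty messages and unknown `!` commands.

```csharp
using System;

// Hypothetical mirror of the three actions used above; not Pull's DataAction enum.
enum MessageRoute { Ignore, LaunchUnplayedEpisode, UpdateFeeds, ParseFeed }

static class MessageRouter
{
    // Same decisions as DataService.SendMessage, minus WCF and the bus.
    public static MessageRoute Route(string message)
    {
        if (string.IsNullOrWhiteSpace(message)) return MessageRoute.Ignore;
        if (message[0] != '!') return MessageRoute.ParseFeed; // anything else is a feed

        switch (message)
        {
            case "!playunplayed": return MessageRoute.LaunchUnplayedEpisode;
            case "!forcerefresh": return MessageRoute.UpdateFeeds;
            default: return MessageRoute.Ignore; // unknown commands are dropped, as in the original
        }
    }
}
```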

Finally I needed a way to create the WCF service (the server) and a client to talk to it. I am using .NET 4, which means I get access to the great AddDefaultEndpoints method, and it makes this really simple. It works out everything it needs for a default configuration from the URI that is passed in. In my case I pass in a net.pipe URI, so it sets up a named pipe for me.

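```csharp
public IPMF(string instance)
{
    // host is a ServiceHost field on this class; InstanceURL (defined elsewhere in Pull)
    // returns the net.pipe address for the given instance.
    host = new ServiceHost(typeof(DataService), new Uri(InstanceURL(instance)));
    host.AddDefaultEndpoints();
    host.Open();
}
```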

Lastly, sending a message to the named pipe is also very simple. You’ll note that I am using a ChannelFactory here and not a generated proxy. This is because I did not add a service reference. The application already has all the information it needs internally, so there is no need for additional generated code.

```csharp
public static void SendMessageToServer(string instance, string message)
{
    IDataService dataService = ChannelFactory<IDataService>.CreateChannel(new NetNamedPipeBinding(), new EndpointAddress(InstanceURL(instance)));
    dataService.SendMessage(message);

    // Close the channel so the pipe connection is released promptly.
    ((IClientChannel)dataService).Close();
}
```

Final Thoughts

This is the correct way to do this, and it is what you should be using in real systems. Not only is it so much less code, it also works perfectly, with no need for insane hacks.

My learning with IMPF was not only about the exceptionally powerful MemoryMappedFile in .NET 4, but also about when it should and shouldn’t be used.

Making Money with CodedUI

Saturday was Microsoft’s Dev4Dev’s event, where each presenter gets 20min to cover one topic. It is fantastic fun and a great way to learn.

For the event I decided to tackle CodedUI, which is just a great testing technology, and in 20 minutes I showed off a number of its features. Below are the slides. They are not that valuable on their own, unless you jump to the hidden content, where you will find my demo script and some extra information!

If you want to play with the demos, you will also need my pre-constructed demo bits:

- The Xbox Super Store website: http://xbox360superstore.codeplex.com/

- My WPF application: Below

- The XML file with the values for the Data Driven test: Below

For those who attended and saw my second demo not go according to plan, I apologise again. I’ve since run it again and it works every time, so I guess the massive audience scared CodedUI into breaking.


Pulled Apart - Part XII: Parsing feeds (ATOM & RSS) in .NET

Note: This is part of a series, you can find the rest of the parts in the series index.

I’ve mentioned that a podcatcher is really just two things put together: a download manager and a feed parser. Feed parsing is not the easiest thing to build. Just look at my attempt many years ago to build a Delphi RSS parser called SimpleRSS: it works well, but there are many edge cases which can kill it.

The key things that trip you up when writing a parser are:

- RSS and ATOM: there are two major feed formats, RSS and ATOM, and they are very different.

- Versioning: RSS and ATOM both have a number of versions, which require completely different parsing.

- Errors: feeds are easy to produce (it’s just XML), so a lot of feeds do not validate.

With that in mind, I am really happy that the .NET Framework (since 3.5) includes its own parser for feeds: SyndicationFeed.

SyndicationFeed

System.ServiceModel.Syndication.SyndicationFeed supports both ATOM (version 1.0) and RSS (version 2.0). To use it you need to add a reference to System.ServiceModel.dll; in .NET 3.5 it lives in System.ServiceModel.Web.dll. It handles only the parsing and creation of feeds, and I don’t care about the creation side in Pull. To parse a feed, you pass an XmlReader to the static Load method and it takes care of the parsing.

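```csharp
using System.ServiceModel.Syndication;
using System.Xml;

public static SyndicationFeed LoadFeed(string podcastUrl) // method name is illustrative
{
    using (XmlReader reader = XmlReader.Create(podcastUrl))
    {
        return SyndicationFeed.Load(reader);
    }
}
```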
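For a podcatcher, the interesting part of each item is usually its enclosure. Since so many feeds do not validate, it is also worth guarding the load. Below is a sketch under those assumptions; the `FeedReader` class and its methods are hypothetical. `SyndicationFeed.Load` reports malformed XML, or XML that is neither RSS 2.0 nor Atom 1.0, as an `XmlException`. RSS `<enclosure>` elements come through as links whose `RelationshipType` is `"enclosure"`. On .NET Core and later the type comes from the System.ServiceModel.Syndication package rather than System.ServiceModel.dll.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.ServiceModel.Syndication;
using System.Xml;

static class FeedReader
{
    // Parses RSS 2.0 or Atom 1.0 from a string. Returns false instead of throwing
    // when the XML is malformed or is not a feed format SyndicationFeed understands.
    public static bool TryLoad(string xml, out SyndicationFeed feed)
    {
        try
        {
            using (XmlReader reader = XmlReader.Create(new StringReader(xml)))
            {
                feed = SyndicationFeed.Load(reader);
                return true;
            }
        }
        catch (XmlException)
        {
            feed = null;
            return false;
        }
    }

    // Podcast episodes are the item links whose relationship type is "enclosure".
    public static List<Uri> EnclosureUris(SyndicationFeed feed)
    {
        return feed.Items
            .SelectMany(item => item.Links)
            .Where(link => link.RelationshipType == "enclosure")
            .Select(link => link.Uri)
            .ToList();
    }
}
```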

That really is as complex as this gets.

Pulled Apart - Part XI: Talking to yourself is ok, but answering back is a problem. Why IMPF destroyed CPUS?

Note: This is part of a series, you can find the rest of the parts in the series index.

Pull, for me, is as much about learning as it is about writing a useful tool that others will enjoy, and I often head down a path only to learn it was wrong. Sometimes I realise before a commit and no one ever knows. Other times it is committed, and the source history reads like an example of how bad things can get. Sometimes I even ship bad ideas. IMPF is one area where I shipped a huge mistake, which caused Pull to easily consume an entire CPU core while doing zero work.

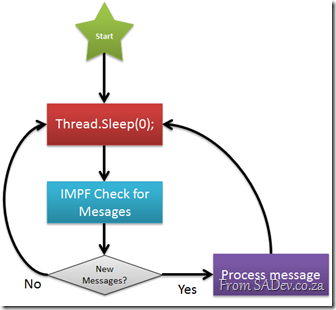

IMPF would check for messages using the following process:

The Thread.Sleep(0) is there to ensure application messages get processed, but it is zero so that a message is processed as soon as it arrives. This meant that the check, which did a lot of work, was running almost constantly. As a result, Pull ended up eating 100% of a core.

The Solution

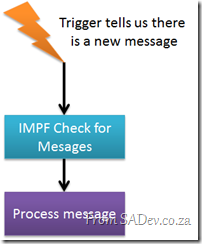

The solution to this was to change the process from constantly checking to getting notified when there is a new message.

This is also much simpler to draw than the other way. Maybe that should be a design check: the harder it is to draw, the less chance it works.
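Inside a single process, the same change from polling to notification can look like the sketch below. It is an in-process analogue only and not what Pull does. The consumer blocks on an `AutoResetEvent` instead of spinning with `Thread.Sleep(0)`, so it uses no CPU while it waits. `MessageInbox` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

sealed class MessageInbox
{
    private readonly Queue<string> pending = new Queue<string>();
    private readonly AutoResetEvent signal = new AutoResetEvent(false);

    // Writer side: queue the message, then wake the reader (no polling involved).
    public void Post(string message)
    {
        lock (pending)
        {
            pending.Enqueue(message);
        }
        signal.Set();
    }

    // Reader side: sleeps until signalled (or the timeout passes), then drains the queue.
    // It can occasionally wake to an empty queue if an earlier drain already took the
    // message; in that case it just returns an empty list.
    public List<string> WaitAndDrain(TimeSpan timeout)
    {
        List<string> drained = new List<string>();
        if (!signal.WaitOne(timeout))
        {
            return drained; // timed out, nothing arrived
        }

        lock (pending)
        {
            while (pending.Count > 0)
            {
                drained.Add(pending.Dequeue());
            }
        }
        return drained;
    }
}
```

The writer calls Post, and a reader thread calls WaitAndDrain in a loop, doing no work at all between messages.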

The only issue is this: how do I make that trigger fire from another application when it writes a message IMPF should read?

Windows Messaging

Windows internally has a full messaging system that you can use to send messages to various components in Windows. For example, you can turn the screen saver on or off, or send messages to applications. I have used this previously in Pull to tell Windows to add the shield icon, if needed, to the protocol handler buttons (see Part IX).

I can also use it to ping an application with a custom message which that application can act on. For Pull when I get that ping I know there is a new IMPF message.

The first part of this is finding the window handle of the primary instance that I want to ping. I do this by looking at the processes running on the machine and using a dash of LINQ to filter them down to the primary instance.

```csharp
private static IntPtr GetWindowHandleForPreviousInstances()
{
    Process currentProcess = Process.GetCurrentProcess();
    string processName = currentProcess.ProcessName;
    List<Process> processes = new List<Process>(Process.GetProcessesByName(processName));

    IEnumerable<Process> matchedProcesses = from p in processes
                                            where (p.Id != currentProcess.Id) &&
                                                  (p.ProcessName == processName)
                                            select p;

    if (matchedProcesses.Any())
    {
        return matchedProcesses.First().MainWindowHandle;
    }

    return IntPtr.Zero;
}
```

Now that I know who to ping, I just need to send the ping. I do this by calling the Win32 API SendNotifyMessage:

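```csharp
public static int NotifyMessageID = 93956;
// Note: Windows reserves message numbers above 0xFFFF for the system; a value in the
// WM_APP range (0x8000-0xBFFF) or one returned by RegisterWindowMessage is the documented choice.

private static class NativeMethods
{
    [DllImport("user32.dll", SetLastError = true, CharSet = CharSet.Auto)]
    [return: MarshalAs(UnmanagedType.Bool)]
    public static extern bool SendNotifyMessage(IntPtr hWnd, int Msg, IntPtr wParam, IntPtr lParam);
}

public static void PingPreviousInstance()
{
    IntPtr otherInstance = GetWindowHandleForPreviousInstances();
    if (otherInstance != IntPtr.Zero)
    {
        // The target window belongs to another process, so this returns without waiting for it to be handled.
        NativeMethods.SendNotifyMessage(otherInstance, NotifyMessageID, IntPtr.Zero, IntPtr.Zero);
    }
}
```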

That takes care of sending, but how do I receive the ping? I need to override the WndProc method on my main form to check for the message. If I get the right ID (the NotifyMessageID on line 1 above), I act on it. In my case I use the bus to tell IMPF that there is a new message.

```csharp
protected override void WndProc(ref Message message)
{
    if (message.Msg == WinMessaging.NotifyMessageID)
    {
        this.bus.Broadcast(DataAction.CheckIPMF);
    }

    base.WndProc(ref message);
}
```

This has enabled IMPF to act only when needed and removed a thread, since IMPF no longer needs its own. It has also simplified the IMPF code and made Pull a better citizen on your machine.

South African ID Number Checker in Excel version 2

18 February 2016: Fixed a bug in the multiple checks with the date display. Thanks to John Sole for pointing it out.

8 August 2014: Just a quick note that the spreadsheet has been updated. It now checks more thoroughly whether the date is valid (including leap years), it has been cleaned up a lot, and it will show you both years if we cannot be certain which century the person was born in. Tested with Excel 2013; your mileage may vary on other versions.

A long time ago I built a simple Excel spreadsheet that worked out whether an ID number was valid or not. Since I released it, I have received a lot of feedback about it. Most of the feedback was about how it worked, but a week ago Riaan contacted me and pointed out a bug, so I took this as an opportunity to rebuild it.

Not only does the new version check the validity of the ID number, but it also tells you where the person was born, their gender and birth date.
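The spreadsheet does this with Excel formulas. As a rough illustration of the same checks, here is a C# sketch based on the commonly documented layout of the 13-digit number:

- six digits for the birth date (YYMMDD)
- four digits for gender, where 5000 and above means male
- a citizenship digit, where 0 is a citizen and 1 a permanent resident
- one more digit
- a Luhn check digit

It is not a port of the workbook's formulas, and `SaIdNumber` is a hypothetical name. It also shows the century problem: it returns every plausible birth date that is a real calendar date and is not in the future.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

static class SaIdNumber
{
    // Luhn checksum over all 13 digits: valid when the weighted sum is a multiple of 10.
    public static bool HasValidChecksum(string id)
    {
        if (id == null || id.Length != 13 || !id.All(c => c >= '0' && c <= '9')) return false;

        int sum = 0;
        for (int i = 0; i < 13; i++)
        {
            int digit = id[12 - i] - '0';   // walk from the rightmost (check) digit
            if (i % 2 == 1)                 // double every second digit
            {
                digit *= 2;
                if (digit > 9) digit -= 9;  // same as adding the two digits together
            }
            sum += digit;
        }
        return sum % 10 == 0;
    }

    // Digits 7-10: 0000-4999 female, 5000-9999 male.
    public static string Gender(string id) => int.Parse(id.Substring(6, 4)) < 5000 ? "Female" : "Male";

    // Digit 11: 0 = South African citizen, 1 = permanent resident; anything else is reported as unknown.
    public static string Citizenship(string id) =>
        id[10] == '0' ? "SA citizen" : id[10] == '1' ? "Permanent resident" : "Unknown";

    // YYMMDD has no century, so try 19YY and 20YY, keeping only real dates that are not in the future.
    // DaysInMonth takes care of leap years (e.g. 29 Feb is valid in 2000 but not in 1900).
    public static List<DateTime> CandidateBirthDates(string id, DateTime today)
    {
        int yy = int.Parse(id.Substring(0, 2));
        int mm = int.Parse(id.Substring(2, 2));
        int dd = int.Parse(id.Substring(4, 2));
        List<DateTime> result = new List<DateTime>();
        if (mm < 1 || mm > 12) return result;

        foreach (int century in new[] { 1900, 2000 })
        {
            int year = century + yy;
            if (dd < 1 || dd > DateTime.DaysInMonth(year, mm)) continue;
            DateTime date = new DateTime(year, mm, dd);
            if (date <= today.Date) result.Add(date);
        }
        return result;
    }
}
```

With today set to 18 February 2016, the made-up number 8001015009087 passes the checksum and reads as male and SA citizen. It has one candidate birth date, 1 January 1980; 2080 is discarded as being in the future.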

Something else I wanted to do was clean up the calculations, so they have now been moved to their own (hidden) tab and documented.

For those who need to do bulk checking, the second sheet of the spreadsheet can check multiple ID numbers.

I want to extend a massive thanks to Riaan Pretorius, not only for pointing out the bug but also for running the new version through its paces and finding some issues in it. The fact that this one is much better is owed to him; I just typed the code.

You can download the Excel file below!

Next time on Information Worker

If you follow the IW website you may have seen that the September community meeting in Jo’burg would be about SharePoint 2010 Deployments. That has changed to something far more exciting: Double Demo Day!

Double Demo Day means we get to see two members of the community do a demo of something very interesting. The demos are:

Creating Workflows with SharePoint Designer 2010, InfoPath and Visio

Creating workflows with Visio 2010 and SharePoint Designer 2010 has never been easier. In this session I’ll go through the process of rapidly creating and deploying workflows in a SharePoint 2010 environment.

This will be presented by Ridwan Sassman, who helped us out last month with video taping the session.

Branding SharePoint 2010 with MasterPages, Layouts and CSS

One of the largest limitations of WSS 3.0 and MOSS 2007 is that branding SharePoint requires intricate knowledge of the platform and, in some cases, breaking a few rules and modifying out-of-the-box system files to get the desired look and feel. Come and see how the theming engine in SharePoint 2010, together with CSS, Master Pages and Layouts, can be used to brand your SharePoint site using the amazing new SharePoint Designer 2010.

This will be presented by Brent Samodien. If you have been to a SLAB’s you will know Brent as he helps us with the venue!

October

Looking ahead to October – there is no Jo’burg community meeting. Why? ‘cause we will all be at Tech·Ed Africa 2010! If you haven’t registered then you must do so NOW! Or you could try and win a free entry!