When the Tech·Ed craving has you?

BB&D is once again sponsoring the community lounge at Microsoft Tech·Ed Africa 2010! This year there are some fantastic prizes to be won in the community lounge thanks to BB&D.

The big prize is a Microsoft Xbox 360, but there are also some smaller prizes available. The one I am most excited about is the Flying Screaming Monkey, who looks like a cross between Zorro and the monkey from the Chicken Licken adverts.

What makes him really cool is his arms are springs, so you can stretch him like a slingshot and when you let him go he flies across the open plan office inflicting monkey love on any unsuspecting co-worker.

To even the odds though, he screams the entire flight (and he screams again if he hits the ground hard enough), so your co-workers do have some time to duck out of the monkey’s flight path!

If you are keen to win a monkey of your own, an Xbox or any other prize, head over to the community lounge at Tech·Ed!

StackExchange Flair

For a while the flair on my site has included my stats from StackOverflow, ServerFault and SuperUser. In my article on it, I mentioned I used the iFrame, but I stopped that a few months ago and switched to getting the JSON data for my accounts directly and parsing that. I did this as it was orders of magnitude faster than loading via the iFrame.

Those who attended my DevDays talks will recognise that code, as it is the same code I used in my demos.

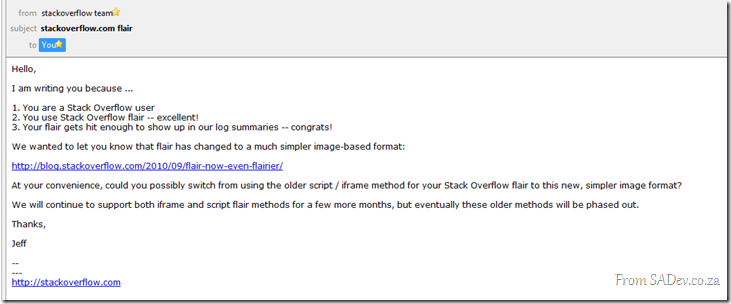

Then recently an email arrived:

Damn, my jQuery magic was about to end so what could I do but change? When I started looking at the new flair I noted that StackOverflow wanted me to hotlink the image, i.e. have my visitors get it from their server, but the performance for pulling the image was still poor compared to my own website (or so the Firebug tool says). So what could I do to improve this?

What I did was use wget, a Linux tool for downloading files (I’m hosted on a Linux box), and schedule it to download my StackExchange flair once a day and store it on my website, which means it gets served faster. As my numbers won’t change heavily from day to day (I’m not Skeet), once a day is a good balance between keeping it fresh and making it cacheable.

The only downside is that my flair usage stats on StackExchange will likely drop, but I don’t really care about that.

The wget command is:

wget http://stackexchange.com/users/flair/1c5ab06b9a844e49b817e7eeb31977e0.png -O <path>/files/stackexchange.png
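For anyone without wget, a minimal Python sketch of the same idea follows. It is an illustration under assumptions, not the setup described above: `is_stale`, `refresh_flair` and the local file name are hypothetical, and the one-day threshold simply mirrors the once-a-day schedule.

```python
import os
import time
import urllib.request

FLAIR_URL = "http://stackexchange.com/users/flair/1c5ab06b9a844e49b817e7eeb31977e0.png"
ONE_DAY = 24 * 60 * 60  # seconds


def is_stale(path, max_age=ONE_DAY, now=None):
    """Return True if the file is missing or older than max_age seconds."""
    if not os.path.exists(path):
        return True
    now = time.time() if now is None else now
    return now - os.path.getmtime(path) > max_age


def refresh_flair(path, url=FLAIR_URL):
    """Download the flair image only when the local copy is stale."""
    if is_stale(path):
        urllib.request.urlretrieve(url, path)
        return True
    return False


# Hypothetical usage; point the path at a folder your web server serves:
# refresh_flair("stackexchange.png")
```

Because the download is skipped while the local copy is fresh, the script can be run as often as you like without hitting StackExchange more than about once a day.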

Poken @ Tech·Ed 2010

![image[8] image[8]](https://www.sadev.co.za/files/image8_thumb.png) We live in an increasingly digital and disconnected world where we seldom meet face to face, and in less than a week that will all change because it will be time for Tech·Ed Africa 2010! Last year a new piece of hardware was unveiled, the Poken – which I can assure you will be even bigger this year.

For those who do not know what a Poken is, it’s a small device which links your digital persona to the physical world! Or put more simply, it’s a way to share details about who you are with other people; think of it as a digital business card.

From Ruari Plint’s post on these guys, here is what you need to do:

- Pop onto the Poken website to find out all about it and why we are going crazy “Poken-ing” everyone this year.

- Sign up for your own Poken account.

- Get your Poken device, before or at TechEd Africa.

- Join us and go “Poken” your friends, and then some.

- Create your own “Poken” language and make us all laugh at the possibilities.

- Pop on to the TechEd Social media sites and start expressing yourself.

I have it on good authority that the Pokens on sale at the event will be at a special price and plenty will be available to WIN!

SharePint @ Tech·Ed 2010!

After a full day of learning new and interesting SharePoint tips, tricks and ideas, come and hang out with fellow SharePoint enthusiasts to help digest your new knowledge and a pint or two of anything else you like.

Hosted by The MOSS Show, with some prizes to give away, it should be a fun evening for all.

RSVP essential as we need to finalise venue bookings!

Date: Monday, 18 Oct.

Venue: Cubana Durban, 128 Florida Road

Time: 7:00 p.m.

Dress: Collared shirts & smart shoes for gents

Map & Directions from the CCI: View Map

Click to Register Here

A special thanks to our Sponsors:

Tech·Ed Africa 2010 Twitter List

Looking for Tech·Ed Africa 2010 speakers and key accounts, like Xbox South Africa, on Twitter? The solution is a Twitter list I created where you can find everyone in one place, to follow individually or to follow as a whole list.

List: @rmaclean/teched-africa

Being a list, it is not filtered on hashtags and so on, which means you will likely get off-topic discussion too.

If I am missing anyone, please let me know via the site or Twitter.

Tech·Ed Africa 2010 Calendar is AWESOME!

If you have registered for Tech·Ed Africa 2010, there is a feature that you just have to use – the calendar. I know calendars sound boring but this one is not! To use it, log in to your Tech·Ed Africa 2010 account (My Tech·Ed –> Login), then click on Calendar –> My Event Calendar and you can click on each time slot and scroll through a list of content available at that time slot.

This has the benefit of helping you focus on what is available and getting that planning out of the way, but you may miss the BEST features in it. Once you have completed your planning you can use the small iCal and printer buttons at the top:

Print does exactly what you think – a clean print view:

But the iCal is FANTASTIC – it provides you with a file to bring into Outlook which lists all your sessions in your calendar so you can easily sync to your device – but more than that, ALL the details are listed in the body of the invites, so when you arrive at the event you can quickly recall what the session is about!

Internet Explorer 9 breaks with localhost

There is a known bug for this: 601047. This is resolved with RTM!

You can hear Eric Lawrence talk about this bug in the Herding Code Podcast

Internet Explorer 9 works great, except when it doesn’t, and it seems to not work for developers more than most, or maybe it’s just me (could the IE9 team be targeting me?).

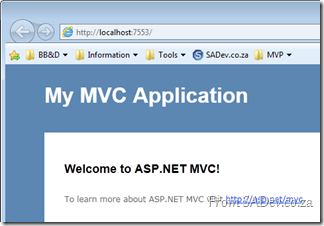

Paranoia aside, there is an issue where, when testing web applications (ASP.NET, MVC) or Silverlight applications from Visual Studio (i.e. press F5), it just refuses to load. Thankfully this has been confirmed by other people.

What is going on and how do we solve this? Because it is really frustrating and it also makes for bad demos (especially with TechEd around the corner).

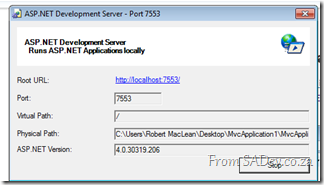

The first part of the problem is the ASP.NET Development Server which is what is hosting your websites when you hit F5.

Next part of the problem is Windows, especially since it assumes IPv6 is better than IPv4. Note in the picture below that when you ping localhost you get an IPv6 address.

So what appears to be happening is when IE9 tries to go to localhost it uses IPv6, and the ASP.NET Development Server is IPv4 only and so nothing loads and we get the error.

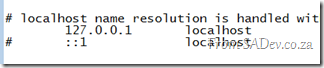

To solve this, fire up Notepad in administrator mode, navigate to <windows directory>\system32\drivers\etc\ and open the hosts file. Inside you will find a number of lines prefixed with a hash (which makes those lines comments). Remove the hash from the line which has 127.0.0.1 in it, as below, and save.

This will cause Windows to resolve localhost to IPv4 first (you can confirm by pinging localhost) which means that IE9 will do the same and now it just works every time.
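To double-check the edit without pinging, a small Python sketch can list which addresses the hosts file actively maps to localhost. The `localhost_addresses` helper is hypothetical, and the two sample texts are illustrative stand-ins for the file before and after removing the hash, not a copy of any particular machine's file.

```python
def localhost_addresses(hosts_text):
    """Return the addresses mapped to 'localhost' on active (uncommented) lines."""
    addresses = []
    for line in hosts_text.splitlines():
        # Everything after a hash is a comment in the hosts file format.
        entry = line.split("#", 1)[0].split()
        if len(entry) >= 2 and "localhost" in entry[1:]:
            addresses.append(entry[0])
    return addresses


# Illustrative sample: both localhost lines are commented out.
before = """
# localhost name resolution is handled within DNS itself.
#	127.0.0.1       localhost
#	::1             localhost
"""

# Illustrative sample: the hash has been removed from the IPv4 line.
after = """
# localhost name resolution is handled within DNS itself.
	127.0.0.1       localhost
#	::1             localhost
"""

print(localhost_addresses(before))  # []
print(localhost_addresses(after))   # ['127.0.0.1']
```

On a real machine you would pass in the contents of the hosts file itself instead of the sample strings.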

Redirected down a one way: Clearing the Internet Explorer host redirect cache

Internet Explorer 9 is fast, really, really fast! A lot of that speed comes from the massive caching improvements in IE9 – but this is a bit of a double-edged sword, especially for developers when caching gets in the way of what is actually happening. I spent two hours debugging an odd caching issue recently and this is the sad story.

For some testing I needed to set up a redirect, in this case a 301 permanent redirect (handy HTTP status codes cheat sheet in case you don’t remember these). What this would do is enable me to have site alpha (http://localhost:5000/Demo) redirect to site beta (http://localhost:9000/Demo).

Prior to this the scenario looked like this:

Behind the two browser windows is the IE 9 Developer tools and their fantastic new network capture feature. You can easily see that when I hit site alpha I got a 200 result, meaning all good and it loaded.

Once I set up the redirect, you’ll see I get a 304; this is because the data is already cached. Note that even though I typed in the site alpha URL it immediately loaded site beta. This is because the browser had cached the redirect and so skipped the network steps for performance.

Now the problem: I wanted to turn off the redirect, however the browser had cached it and so would ignore the change. Clearing cache, deleting files, rebooting and even using the IE reset option did nothing to solve this.

The only way to fix it was to fire up the fantastic Fiddler tool and use its Clear Cache option with the option to delete persistent cookies, which flushes the WinINET cache.

Considering that this is supposedly the same as clearing the IE cache I have no idea why this works and IE cache clearing doesn’t but it does work.

Have you got the right stuff?

Tech·Ed Africa is a few weeks away and Microsoft have announced an amazing event which will run at the event – Dev Idol!

It is really easy, you go to Tech·Ed and at a specified time you get up and present a short presentation of your choosing – and if it is the best you win, and you win BIG!

The prize for the winner is a speaker slot at Tech·Ed Africa 2011 (this is a big deal, trust me) and an Xbox 360! Two runners-up get free entry to Tech·Ed Africa 2011.

Before you throw your hands up and say, “What’s the point?! Tech·Ed Africa 2010 already has great presenters who will easily win it!”, well current presenters are not allowed to enter, this is only open to attendees! However you have to hurry as you only have until the 13th October to get your entries in.

Get all the details you will need at http://blogs.msdn.com/b/southafrica/archive/2010/09/29/dev-idol-comes-to-tech-183-ed.aspx

Tech·Ed Africa - How to find the gems in the sessions?

Tech·Ed is around the corner and if you have seen the session catalogue, you will see there are 295 sessions available for you to attend! How are you supposed to know which sessions are worth attending?

Disclaimer: Rights Management Server is a great product for certain situations and I am picking on it in this post as an example more than anything else.

Know Yourself

The first thing to get right is to know yourself – if you have just started writing C# code, attending an advanced session on the internal workings of LINQ may be a waste of time as you may not be able to keep up to speed.

Knowing yourself is not just about knowing your skill level, but also knowing what is important to you. If you have no plans to use Rights Management Server (RMS), don’t attend the sessions on it, because you will miss out on other great sessions that may bring you value. However, this does not mean you should only attend sessions on technology you know and work with.

Identify Trends

Part of the benefit of the conference is exposure to items which you may not get the time to see during your normal day, so you may be tempted to go to that RMS session because you do not know about it. My suggestion is that when you are looking for sessions on topics you do not know about, you should look at where the hot trends are (they aren’t in RMS). A great way to see what the hot trends are is to look at what community and knowledge sharing sites, like Channel 9, are talking about.

The reason I suggest new trends over other items is that the new trends are where the cutting edge technology and learning are, and so there is often not a lot of content available on those topics, compared to, say, RMS, which is well documented and where training is easy to get hold of.

Decoding Sessions

Every session at Tech·Ed has a code, and this code has some key information that will mean you get to the right sessions easily. If I look at one of the sessions I am presenting, the code associated is APS309, but what does that mean?

- APS – This is the track, or the high level concept that the session is part of. APS in this case refers to Application Server. Microsoft has a great guide to all these TLA (three letter acronyms) on the technical track page. The only item missing from there is WTB, which stands for Whiteboard which I will cover next.

- 3 – This digit is key, it identifies the level of the session and is between 1 and 4.

- 1 indicates an introduction session - where you can come in with zero knowledge on the topic. Expect it not to be deep, expect the pace to be slow and expect it to cover the concepts.

- 2 indicates a beginner session - you should’ve seen something on it before arriving. Expect it to cover usage scenarios and the pace and depth to be increased.

- 3 indicates a technical session – you should be working with the technology. These often go fast and deep or explore a new area in that space.

- 4 indicates a deep dive – you should expect a session that is for the most advanced of people.

- 09 – This is a unique identifier.
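The breakdown above can be sketched as a small Python helper. This is only an illustration: the `decode_session_code` name and the assumption that every code is exactly three letters, one level digit and two identifier digits are inferred from the single APS309 example, not from an official specification.

```python
import re

# Level meanings as described in the list above.
LEVELS = {
    1: "introduction",
    2: "beginner",
    3: "technical",
    4: "deep dive",
}


def decode_session_code(code):
    """Split a code such as 'APS309' into track, level and identifier."""
    # Assumed shape: three letters, a level from 1 to 4, then two digits.
    match = re.fullmatch(r"([A-Z]{3})([1-4])(\d{2})", code.strip().upper())
    if match is None:
        raise ValueError(f"unrecognised session code: {code!r}")
    track, level, ident = match.groups()
    return {
        "track": track,
        "level": int(level),
        "kind": LEVELS[int(level)],
        "id": ident,
    }


print(decode_session_code("APS309"))
# {'track': 'APS', 'level': 3, 'kind': 'technical', 'id': '09'}
```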

The next step in understanding a session is to read the abstract, which is the overall plan for the session. So if we take my session again, the title is: Intro to Workflow Services and Windows Server AppFabric. However, if you read the abstract you will note that it mentions Workflow Foundation (WF) first and talks about developers using it. Then it mentions WF and usage with Windows Communication Foundation (WCF) and how they integrate in .NET 4. Finally it mentions AppFabric and hosting.

This tells you a lot about my plan for the session: I am going to talk to developers first about WF and then WCF. Finally I will bring in the more technical topic of hosting these in AppFabric. This does not come across in the title, which is why the abstract is important to read, and read carefully.

Session Types

There are two types of sessions: breakouts and whiteboards. A breakout is a formal presentation where normally one person presents a topic with demos. A whiteboard is far less formal and often includes panel discussions – here you will find the topics often change based on the questions and discussions with the audience.

I have personally found that when I need to learn a technology I head to a breakout, but if I know the topic then the whiteboards give senior developers much better value.

Networking

Tech·Ed is first about getting a few thousand passionate people together which means you have the option to network with experts and make great contacts. Most presenters will take time for questions, but if not, most will welcome you coming up to them afterwards.

In addition to this there are also two special options for networking:

- Community Lounge – Community leaders are some of the smartest and most passionate people I know and the community lounge provides a great place to relax and talk to them.

- Ask the Experts – This is a special event where experts make themselves specially available to take questions and have one on one (and sometimes one on many) discussions. This is a great chance to get contacts so that when you run into a problem you have a lifeline.

Get Started Now

Don’t wait until you arrive at Tech·Ed to start thinking about sessions, start thinking now about the sessions you want to go to and digging into what trends and speakers you should be finding.

A great tip for corporates is something we at BB&D do each year for the 30 or so people we send – a pre-event get-together. Here the people who have been before share some guidance on what to expect at the event with those who have never been, and we all talk about the sessions and speakers we are excited about, to help those who do not have the time to do deep research find some gems.

Lastly, for those who tweet, start following the Twitter conversation for the event! There is an official account @teched_africa and an officially long hashtag #TechEdAfrica. To really impress people you can combine them both into a single Twitter Search: @teched_africa OR #TechEdAfrica!

Update 8 Oct 2010: I presented a short session based on this post to the staff at BB&D which you can find below: