TIL: Tax is an asymptote

Tax is complicated, and we don't even have lunatic tariffs to worry about.

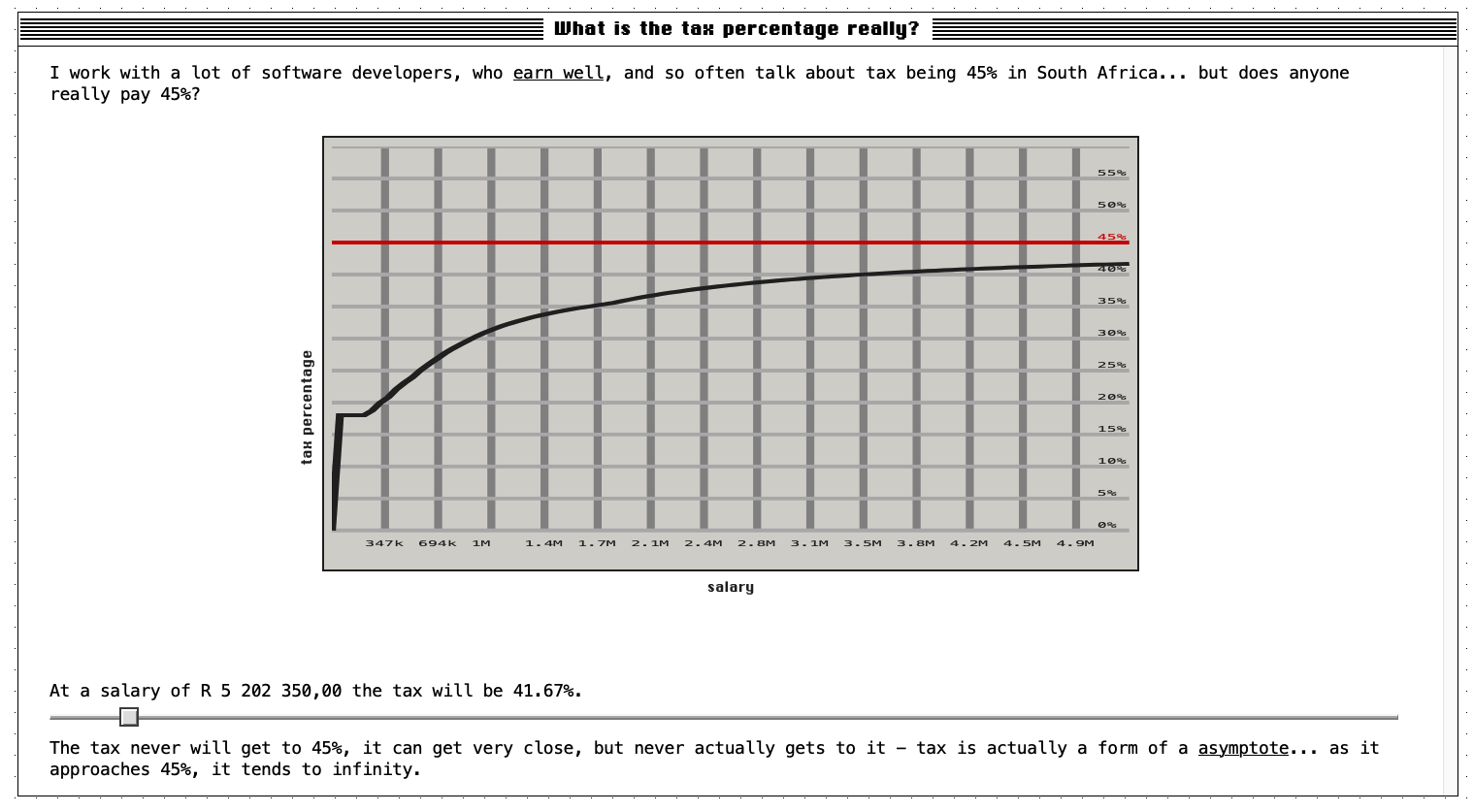

People often say we pay up to 45% tax in South Africa, but that is seldom true; in fact, mathematically it will never be true, because the effective tax rate is an asymptote: it approaches 45% without ever reaching it. Even if you round up, you have to earn over R32.1 million a month to get to 45%, something no-one (or very few people) will get close to.

I wanted to explore this, so this evening I built taxreality.sadev.co.za to play with the formula and visualise it.

As with my last project, it is built with Deno, Fresh and Deploy. I also used System.css for some of the styling, and RealFaviconGenerator, which is an amazing tool: it let me use an SVG favicon that changes to suit light and dark mode!
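To see why the rate can never actually hit 45%, here is a minimal TypeScript sketch of an effective-rate calculation. The bracket values are assumed to mirror SARS's 2024/25 individual tables and deliberately ignore rebates and credits, so treat the exact figures as illustrative; `annualTax` and `effectiveRate` are my own names, not code from taxreality.sadev.co.za.

```typescript
// Assumed annual brackets (rand), mirroring the SARS 2024/25 individual
// tables as I understand them. Rebates, medical credits etc. are ignored.
interface Bracket {
  from: number; // income at which this bracket starts
  base: number; // tax already owed on income up to `from`
  rate: number; // marginal rate applied to income above `from`
}

const BRACKETS: Bracket[] = [
  { from: 0, base: 0, rate: 0.18 },
  { from: 237_100, base: 42_678, rate: 0.26 },
  { from: 370_500, base: 77_362, rate: 0.31 },
  { from: 512_800, base: 121_475, rate: 0.36 },
  { from: 673_000, base: 179_147, rate: 0.39 },
  { from: 857_900, base: 251_258, rate: 0.41 },
  { from: 1_817_000, base: 644_489, rate: 0.45 },
];

// Progressive tax: base of the highest bracket reached, plus the marginal
// rate on whatever income sits above that bracket's lower bound.
function annualTax(income: number): number {
  let bracket = BRACKETS[0];
  for (const b of BRACKETS) {
    if (income > b.from) bracket = b;
  }
  return bracket.base + (income - bracket.from) * bracket.rate;
}

// Effective rate = total tax / total income. Above the top threshold this
// equals 0.45 - (0.45 * 1_817_000 - 644_489) / income, so it approaches
// 45% as income grows but never reaches it.
function effectiveRate(income: number): number {
  return income > 0 ? annualTax(income) / income : 0;
}

for (const monthly of [50_000, 500_000, 32_100_000]) {
  const percent = effectiveRate(monthly * 12) * 100;
  console.log(`R${monthly} a month: ${percent.toFixed(3)}% effective`);
}
```

Above the top threshold every extra rand is taxed at 45%, but the income below it was taxed at lower rates, so that fixed shortfall (R173 161 in this sketch) is divided by an ever-larger income and shrinks towards zero without vanishing. For R32.1 million a month the sketch prints about 44.955%, which only becomes 45% when rounded to one decimal place.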

A holiday project with Deno 🦕, Fresh 🍋, Deploy and Formula 1 🏎️

The project is up at: https://f1teams.sadev.co.za/

Yesterday was a public/bank holiday in South Africa, and that gave me a chance to build something greenfield in a day... but I also wanted to push my skills by doing more work with Deno. I initially thought about building with Next, but I use that often... so I thought I would try Fresh (the web framework from Deno), and that naturally led to using Deploy, Deno's hosting service.

But what to build? How about a simple website that shows the evolution of Formula 1 teams, in time for the Grand Prix this weekend, so I can see what each team was called over time.

Building with Deno was, as always, painless: native TypeScript support and the full toolchain were great. The only issue I had was that I wanted trailing commas in my JSONC data... but the formatter won't allow that (a very minor gripe). Fresh was uneventful... honestly, if you know Next, getting up and running takes very little extra time, and the structure makes so much sense. Deploy was the absolute highlight though: an amazingly easy service that deploys straight from GitHub, with a very generous free tier. I am now thinking of all the ways I can make use of it.

From start to finish, it took about half a day... and that was with technologies that were new to me. This is an amazing stack for quick, professional development.

Two other aspects worth mentioning:

- When I started, I hadn't planned on using Deploy, which has a free KV store available; had I planned on Deploy from the start, I would likely have used that... but since I hadn't, I went with a static JSONC file and parsed it with JSONC-Parser. I absolutely love JSONC over pure JSON... and the sooner we all move to it, the better.

- I used no component library... everything is "handcrafted" HTML and CSS, not even something like Sass. I still think component libraries have a place in bigger systems, but modern HTML and CSS are so powerful that it is wonderful to keep the size down (the whole website is 100 KB).
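The post doesn't show the data file or its schema, so this is a hypothetical sketch of what the parsed team data might look like and how a page could answer "what was this team called in a given season?". The `TeamName` shape and both functions are my own illustrative assumptions, the data is a single simplified lineage, and the JSONC parsing step (JSONC-Parser in the post) is left out so the example stays self-contained.

```typescript
// Hypothetical shape for one team lineage: each entry is a name the team
// raced under from season `from` to season `to` (inclusive).
// `to` is omitted for the name currently in use.
interface TeamName {
  name: string;
  from: number;
  to?: number;
}

// Illustrative, simplified data for a single lineage.
const lineage: TeamName[] = [
  { name: "BAR", from: 1999, to: 2005 },
  { name: "Honda", from: 2006, to: 2008 },
  { name: "Brawn", from: 2009, to: 2009 },
  { name: "Mercedes", from: 2010 },
];

// Returns the name used in a given season, or undefined if the lineage
// did not race that year.
function nameInSeason(entries: TeamName[], season: number): string | undefined {
  return entries.find((e) => season >= e.from && season <= (e.to ?? Infinity))?.name;
}

// Renders the lineage oldest-first, e.g. "BAR (1999-2005) -> ...".
function describeLineage(entries: TeamName[]): string {
  return [...entries]
    .sort((a, b) => a.from - b.from)
    .map((e) => {
      const span = e.to === e.from ? `${e.from}` : `${e.from}-${e.to ?? "present"}`;
      return `${e.name} (${span})`;
    })
    .join(" -> ");
}

console.log(nameInSeason(lineage, 2009)); // Brawn
console.log(describeLineage(lineage));
// BAR (1999-2005) -> Honda (2006-2008) -> Brawn (2009) -> Mercedes (2010-present)
```

Keeping each name as a season range means a single lookup answers "what was it called in year X?", and the same array drives the timeline view.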

The more things change... the more they stay the same

DevFest 2024 - Slides and info about my CLI

Today I presented at DevFest, which was a great experience! A bunch of people asked for my slides/notes from the talk, so they are available below.

CLI Stack

Several people also asked about my CLI setup, since it seems so powerful. My CLI stack is as follows:

- The terminal is Warp, which I have written about in my newsletter.

- My shell remains Fish, which is easily the best switch if you are coming to Unix-style shells from Windows (which is how I got to it), and after almost a decade... I still love it.

- For the prompt I use Starship. Warp has a lot of options, but Starship can respond to your machine and the current folder, which gives it even more power. Plus mine uses the Pansexual Flag colours, which brings me so much joy.

- I use Fzy for `cd` autocompletion.

14 things you need to be a successful software developer - Number 5 - Software development is not about coding

This is the first post in the second act of this series' three sections, and that act focuses on what it is like to be a software developer. When I first wrote this, it was for a university talk, and I thought advice on what it is really like to be a software developer might help the students understand what to expect and become successful faster. Since then, consulting and speaking to many people has made me realise that there are developers at every level who do not know these things, and some, especially very talented and senior ones, feel they are there to sit alone and churn out "perfect code"... and they are wrong.

If I had to distil software development into one word, it would be trade-offs. We are always making trade-offs, and those trade-offs involve just three things: Capacity, Time and Features. Very simply, if you want to change one, you need to change at least one of the others, and sometimes both.

I have always liked visualising this as a semi-rigid triangle, with each of the three forming one side.

Time

The time aspect is probably the easiest to think about; it is how long something will take. This can apply to the whole project, but also down to individual tasks.

Capacity

This used to be called People and Resources, but we should always remember that people are not resources, and when we think of it in those terms we miss the nuance.

When working with this triangle and talking about the costs of a project, capacity often maps nicely onto people, so if you want to lower costs this is the obvious lever, which means you would need to increase time (i.e. how long it will take) or decrease features. Capacity, though, is not just about cost; for example, if you have a single developer you might feel you can't change capacity... but it can be changed.

Are you context-switching a lot? That has an impact on capacity. Is the team doing a lot of maintenance work? Again, that impacts capacity. Even the modern view that Scrum has failed is, in part, a realisation that the cost of Scrum lands directly on the capacity side without a greater, or even equal, payoff on the time side.

Even how psychologically safe your environment is has an impact, because a higher-stress environment lowers your people's capacity to deliver.
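To make the capacity point concrete, here is a deliberately toy model, my own assumption rather than any real estimation method: features delivered equals effective capacity multiplied by time, where effective capacity is headcount scaled by a hypothetical `focus` factor that context switching, maintenance and stress eat into.

```typescript
// Toy model of the triangle (an illustrative assumption, not an estimation
// technique): features delivered = effective capacity x time.
interface Team {
  people: number;
  // Hypothetical fraction of time left for planned feature work after
  // context switching, maintenance, ceremonies and stress take their share.
  focus: number;
}

function effectiveCapacity(team: Team): number {
  return team.people * team.focus;
}

// Weeks needed to deliver a fixed amount of feature work, where
// `pointsPerFocusedWeek` is what one fully focused person delivers per week.
function weeksNeeded(featurePoints: number, team: Team, pointsPerFocusedWeek: number): number {
  return featurePoints / (effectiveCapacity(team) * pointsPerFocusedWeek);
}

const focusedTeam: Team = { people: 4, focus: 0.75 };
const interruptedTeam: Team = { people: 4, focus: 0.5 };

console.log(weeksNeeded(60, focusedTeam, 4)); // 5
console.log(weeksNeeded(60, interruptedTeam, 4)); // 7.5
```

Same headcount, same features; the only change is focus, and the time side has to stretch by half to compensate.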

Features

Finally, there are features: not only what the software does, but how it does it. Do we build a feature in a way that meets today's needs, or also the ones we foresee coming in future months? Those are effectively two different sizes of the same thing; i.e. features do not map one-to-one to the stories or epics in your backlog.

Features also incorporate quality, observability and non-functional requirements (FYI, Donald Graham's DevConf talk is a must-watch on non-functional requirements... so check out the DevConf YouTube channel for when it comes out). A feature you create with shitty code versus great code has a different size here.

Tradeoff

Now that we have the triangle and understand the trade-offs, we come back to our initial rule: our job is not about coding. Yes, the triangle has aspects of coding, but our goal is to ship a solution, and to do that successfully we need to identify what will impact the trade-offs and find solutions to those. If you can do that, you will be a far more successful developer and, importantly, the code you do write will be code that matters... not just a bunch of electrons sitting idle on a disk.

What can you control about this?

- Avoid the "it's not my job" view: the goal is shipping software that gets used. That is a team sport, and if you can help achieve it by doing something outside your comfort zone, it is worth doing.

- Finally, while remembering the triangle, also remember Hofstadter's Law: it always takes longer than you expect, even when you take into account Hofstadter's Law.

Escape Conf


Last week I presented a session at Escape Conf on what is new and awesome in JavaScript development! It will be up on YouTube in the near future, but for now, if you are looking for the slides, notes or anything else... you can grab them below!

14 things you need to be a successful software developer - Number 4 - Software systems are alive

The more I work with startups, the more I realise how important this rule is; it really does define the difference between success and failure. Very simply, your software is alive, and it is your job as a software developer to keep it alive.

This starts with your DevOps strategy and investing in it. Having a build gives the project a heartbeat, and when a commit breaks the build... it is like a heart flatlining. The code in your repo should always be ready to ship; that heartbeat is the sign that it is OK. The benefit of this is that it is the first step towards continuous deployment, which is the ideal goal.

Once you have software running, it is still your responsibility to keep it alive. Stats show that 80% of software development cost is accrued while maintaining the software. This blows my mind: if maintenance is 80% of the total, the build is only the other 20%, so if something costs R100 000 to build... it will cost R400 000 to maintain! So everything you can do to lower maintenance costs has MASSIVE benefits in terms of cost savings.
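The R100 000 / R400 000 arithmetic generalises: if maintenance is m% of lifetime cost, the build is the remaining (100 - m)%, so maintenance = build × m / (100 - m). A small sketch of that calculation (the function names are my own, and percentages are whole numbers to avoid floating-point noise):

```typescript
// Maintenance cost implied by a build cost, given maintenance's share of
// the total lifetime cost as a whole-number percentage.
function maintenanceCost(buildCost: number, maintenancePercent: number): number {
  const buildPercent = 100 - maintenancePercent;
  // buildCost is `buildPercent`% of the lifetime cost; scale it to the
  // maintenance share.
  return (buildCost * maintenancePercent) / buildPercent;
}

function lifetimeCost(buildCost: number, maintenancePercent: number): number {
  return buildCost + maintenanceCost(buildCost, maintenancePercent);
}

console.log(maintenanceCost(100_000, 80)); // 400000
console.log(lifetimeCost(100_000, 80)); // 500000
// If better tooling pulled maintenance down to 75% of lifetime cost:
console.log(maintenanceCost(100_000, 75)); // 300000
```

Moving the maintenance share by just five percentage points takes R100 000 off the same project, which is why investing in maintainability pays off so heavily.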

As such, I truly believe we need to change the view that software developers are builders and architects, and instead realise we are gardeners or farmers. We prepare the fields, plant, collect data on the growth, adjust the fertiliser and eventually harvest... but that is not the end; we need to prepare the fields for the next season. The model matches how we work so well and, more importantly, reminds us of our responsibility to living systems.

So what can you do in your own life and team to improve this?

- Ship early, ship often, and get feedback: the shorter your cycles of getting work out to your customers and users, the faster you will understand what works and what does not. We often think of this in terms of shipping versions or deployments, but I have found that even testing benefits from it. Leaving testing to the end of a project is a sure way to slow it down. Start UAT in your second week and you'll find you ship faster.

- Focusing on maintenance is likely the best way to lower costs on a project. Anything you can do, from using your cloud provider's services to get better logs and more observability, to building tools that help you debug and administer the system, is going to help.

- Finally, the riskier something is, the more often you should do it. We solve risk not with red tape, but by doing the risky thing more often and fixing the issues that come up.
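As one concrete example of "better logs", here is a minimal structured-logging sketch: each event is a single JSON object per line, the kind of output cloud log services can typically index and query by field. `logEvent` and its fields are hypothetical, not any particular library's API.

```typescript
type Level = "debug" | "info" | "warn" | "error";

// Minimal structured logger (illustrative sketch): one JSON object per
// line, so logs can be filtered on fields instead of grepping free text.
function logEvent(level: Level, message: string, fields: Record<string, unknown> = {}): string {
  const line = JSON.stringify({
    timestamp: new Date().toISOString(),
    level,
    message,
    ...fields,
  });
  // Errors go to stderr, everything else to stdout.
  if (level === "error") {
    console.error(line);
  } else {
    console.log(line);
  }
  return line;
}

logEvent("info", "order placed", { orderId: "A-1001", durationMs: 42 });
logEvent("error", "payment failed", { orderId: "A-1001", provider: "example" });
```

Carrying the same identifier (here a hypothetical `orderId`) on every related event is what lets you follow one request through the system when something breaks at 2am.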

14 things you need to be a successful software developer - Number 3 - No-one is better positioned to avoid a problem than those who just made it

In 2017 I was working at AWS, and AWS went down... and went down hard. It was a tough night to be an engineer, and when the dust settled hours later, it turned out that it was caused by a typo. The amount of money lost that day by AWS, and more importantly by its customers, was huge, and one person was to blame.

If you were Andy Jassy (CEO of AWS at the time), what would you do with that employee? Fire them? Demote them? No... nothing like that! That engineer was first supported, and then they led the work in their team to ensure it never happened again. Their work then rolled out to the rest of the organisation over the next few months. Ultimately, the entire organisation improved because of it.

This speaks directly to building an organisation where it is safe to make mistakes, which leads to success. There is more on how to build safety in teams coming later in the series, so I won't cover that here.

Rather, what I want to share today is how to build knowledge, and unfortunately, building knowledge takes work. I worked with a tech lead who told me "failing is the best way to learn"... and he was entirely wrong. Failure is not learning; failing is failing... so how do we build knowledge from failure, or from any other situation? Obviously, the safety of the environment matters, but it also takes time: stepping back, discussing what happened with diverse views, and finding the learnings.

What practical tools do we have to help with this?

- Do your sprint retros!

- Norm Kerth's wonderful Retrospective Prime Directive is something to say every time you review a sprint or an incident.

- When you have an incident, take time to do a blameless correction of error (CoE), also called a post-incident review or post-mortem. The best I've ever seen is the AWS model. That article is so detailed and covers an amazing tool you can use not just in a CoE but in many situations: the 5 Whys.

In short, failing in a safe environment, where learning is an action and not just a statement in a company's values, can lead to powerful improvements, and you can help by building blameless cultures and ensuring that learning happens!

Thanks for DevConf 2024

The 9th/8th/7th/6th year of DevConf, depending on how you count it... and what an amazing year! There was a strong demand that it should be called DuckConf going forward 😂 I can't commit to that, but I think Ms. Duckworth will be back in some way for 2025.

What worked?

Feedback via the ratings is still coming in, but looking at social media many, many, many, many, many, many people had an amazing time.

For me, something that at one point was not going to happen because of budget concerns turned out to be amazing: badge hacking. It was wonderful to see people adding ribbons, art and stickers to their badges.

Thanks

DevConf is the combined work of many people: first and foremost, my partner in all things DevConf, Candice Mesk. I could not do this without her; her hard work is so much of this event.

Working hand in hand with us are Michelle, Judith, Tanya, Celeste and the rest of the team from Fizz Marketing. They handle the logistical side and have allowed Candice and me to do this for years while holding full-time jobs. This event is a success thanks to them.

Joining us for the first time this year as DevConf staff was Marié, who handled all the social media. This is a massively exhausting job on the event days, and I am grateful to Marié for all she did this year!

DevConf costs a fortune to run and ticket prices are kept as low as possible thanks to the sponsors! This event would not be possible without them... or it would have R10k tickets and no one would come... so the same thing.

Last but not least are the speakers themselves: they give up months to prepare and plan talks, and I am honoured they chose to do all that work and spend all that energy in exchange for a jacket and some dinners.

How to count DevConf years?

- It is the 9th calendar year, as it was started in 2015

- We have run it 8 times in Gauteng which (since I am in Cape Town) includes Joburg

- We have run it 7 times in Joburg proper, as in 2023 we ran it in Pretoria

- We have run it 6 times in Cape Town.

Tech That Rocks: Warp (Edition 2)

This week on the Tech That Rocks newsletter, I write about Warp! It is an amazing terminal and a major productivity boost for anyone! The edition will take you 2 minutes to read and save you hours in the future, so check it out now.