WinImage - Tidbits from the field

I have posted before about the great tool called WinImage and how I think it is brilliant! I have been using it on and off recently and decided to share some tidbits of information about it.

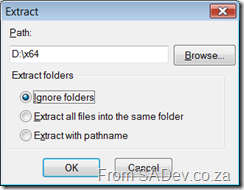

First is a minor annoyance: when extracting from an image, the default option is set to Ignore Folders. This means that the contents of the folder you selected get extracted, but no sub-folders. If you want to extract everything from your folder down you must select Extract with pathname. This should be the default option since it’s the one I use most, and I suspect most people will too. What is nice is that it remembers what you last used, but that first time it is still annoying because you will forget.

There is a plus on the extracting of files, and that is the ability to Extract all files into the same folder. This extracts all the files from the selected point down, including those in any sub-folders, but does not create the sub-folders. I haven’t ever needed it, but it’s nice to know the option is there if I do.
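To make the difference between the three options concrete, here is a rough Python sketch of where each file would land. It is only an illustration of the behaviour described above, not how WinImage works inside: `plan_extraction` and the mode names are made up for this post, and I am assuming that Extract with pathname recreates folders relative to the folder you selected. It also ignores what happens when two files with the same name get flattened into one folder.

```python
from pathlib import PurePosixPath


def plan_extraction(files, selected, mode):
    """Map paths inside an image to output paths for one extraction mode.

    files    -- paths inside the image, e.g. "docs/a.txt"
    selected -- the folder chosen for extraction, e.g. "docs"
    mode     -- "ignore_folders", "with_pathname" or "same_folder"
                (hypothetical names for the three options)
    """
    root = PurePosixPath(selected)
    plan = {}
    for name in files:
        try:
            relative = PurePosixPath(name).relative_to(root)
        except ValueError:
            continue  # file is not under the selected folder
        if mode == "ignore_folders":
            # Only files sitting directly in the selected folder.
            if len(relative.parts) == 1:
                plan[name] = relative.name
        elif mode == "with_pathname":
            # Everything below the selection, sub-folders recreated.
            plan[name] = str(relative)
        elif mode == "same_folder":
            # Everything below the selection, flattened into one folder.
            plan[name] = relative.name
        else:
            raise ValueError(f"unknown mode: {mode}")
    return plan


image_files = ["docs/a.txt", "docs/sub/b.txt", "other/c.txt"]
for mode in ("ignore_folders", "with_pathname", "same_folder"):
    print(mode, plan_extraction(image_files, "docs", mode))
```
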

The other cool feature when extracting is that an icon appears in the task tray. If you hover over it you get a tool tip, which isn’t that cool. The cool part is that the image grows based on how close the extraction is to completion, so you can glance at the icon and quickly see the progress without needing the tool tip. In the pictures below note the first icon growing:

Windows Azure

Reading Paul Thurrott’s review on Windows Azure he mentioned that it is part of the Azure Services Platform or ASP! ASP is already used (twice) in the Microsoft domain for ASP (classic) and ASP.NET, which the non developer community do not understand are different, but it is also a TLA (three letter acronym) for application service provider… which is what Azure aims to be, and if someone at Microsoft actually thought the latter combination th

Willy reviews my LINQ session

Yesterday was the day of my first full day training session, where I took a class of smart people through LINQ! It was MUCH more tiring than I thought it would be, both physically and mentally, but I felt it was a great session. I have yet to see my scores but, going by the verbal feedback I got, I am hoping they will be in the 90% range. One of the smart people to attend was Willy, who has gone and written a review on what was covered, including source code! One nice thing is that the discussions brought up some more topics, and I fine-tuned the content last night so those coming to the next one (which still has a FREE community seat open, I think) will get an even more refined session!

SQL Reporting Services TR - Think you've seen it?

I did a 20min whirlwind tour of SRS 2008 at Dev4Devs (see here for the event info and here for the content), and I mentioned I will be running this again in November (see here). For those who did attend my session at Dev4Devs, you may want to come and see this one, because I have 60min to fill. Even if I spoke like Kirk I couldn’t stretch 23 min (which is what I used) to 60, so I’ve added some really cool new content. The new content covers the designer and the WinForm and ASP.NET chart controls (yeah I know it’s not really SRS but it’s borderline and seriously how cool are they?!), and I also actually get into where that web server went!

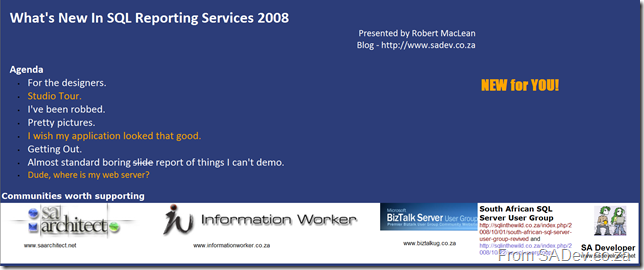

Here is the new introduction slide…

Hope to see you there!

Events in November 2008

Willy posted a short entry clarifying the S.A. Architect member attendance for events BB&D runs. Basically, for a TR we do not cap how many community members can attend, but for a DRP we are capped to 1! He also explained what the difference is between a DRP and a TR.

Anyway for November we kick off with three sessions from me! First is a TR on SSIS, then I have a repeat (due to demand and scheduling issues) of the LINQ session (this is a DRP but it means a community member can attend the first and someone else the second!) and then a TR on SQL Reporting Services. This last one will be a repeat of the content I presented at Dev4Devs (which you can read here). I will be going slower this time and also showing off the new config tool and the report builder tool (which I didn’t show before)! After that Herman takes over with his TR on the Expression Suite, followed by Henk (who did a great DRP on Mono - .Net on LINUX! – today) doing a TR session on Xen virtualisation. The last two for November are an introductory TR on Regular Expressions and a TR on DataSets.

Blog Aggregator for SharePoint

The new S.A. Architect site has some dynamic content on the home page, namely the S.A. Architect Community Leads section and the S.A. Architect Events section. Both use this cool web part from Zlatan, which in a nutshell allows me to specify a number of RSS feeds and a tag to filter on, and then displays the matching content. This means that the community leads do not need to cross post or post twice when they produce content for the site. They simply need to make sure they have the right tag associated and the post will appear. This also means that content that is not relevant to SA Arch does not appear. Very cool stuff and big thanks to Zlatan for it.
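For the curious, the idea of pulling only tagged posts out of a set of feeds can be sketched in a few lines of Python. This is not Zlatan's web part, just a minimal illustration of the concept: the function name `items_with_tag` and the sample feed are invented, and I am assuming the tags arrive as standard RSS 2.0 `<category>` elements and are matched case-insensitively.

```python
import xml.etree.ElementTree as ET


def items_with_tag(feeds, tag):
    """Return (title, link) pairs for RSS items carrying the given tag.

    feeds -- list of RSS 2.0 documents as strings (already downloaded)
    tag   -- the tag to filter on, compared case-insensitively
    """
    wanted = tag.strip().lower()
    matches = []
    for xml_text in feeds:
        channel = ET.fromstring(xml_text).find("channel")
        if channel is None:
            continue  # not an RSS 2.0 document
        for item in channel.findall("item"):
            # Collect this item's <category> values in normalised form.
            categories = {
                (c.text or "").strip().lower()
                for c in item.findall("category")
            }
            if wanted in categories:
                matches.append(
                    (item.findtext("title", default=""),
                     item.findtext("link", default=""))
                )
    return matches


# Invented sample feed: one tagged post and one untagged post.
SAMPLE_FEED = """<rss version="2.0"><channel>
  <title>Example blog</title>
  <item><title>Events in November</title><link>http://example.com/1</link>
        <category>SA Architect</category></item>
  <item><title>Holiday photos</title><link>http://example.com/2</link>
        <category>Personal</category></item>
</channel></rss>"""

print(items_with_tag([SAMPLE_FEED], "sa architect"))
```

Only the first post carries the tag, so only that one would show up on the site; the holiday photos stay on the personal blog.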

What's new in SQL Reporting Services 2008

On Saturday I did a 20min (which is basically nothing) presentation on what is new in SRS 2008 at Dev4Devs! The feedback I have gotten has been very positive and I personally learnt more about what it takes for me to present well. That said some people asked me afterwards about the slides and content I used and the reality is that I didn’t have a single slide! The truth was Eben indicated when I volunteered that developers don’t like slides, so I took it as a challenge and did all my “slides” in SRS! For those who couldn’t attend, here is the run down on what I covered!

Introduction

The first “slide” was really about what I was covering and also some of the user groups out there! I must say sorry to Craig for leaving out www.sadeveloper.net. For those wanting the links to the groups they are:

- SA Architect: www.saarchitect.net

- Information Worker: www.informationworker.co.za

- BizTalk: www.biztalkug.co.za

- SA SQL Server: http://sqlinthewild.co.za/index.php/2008/10/01/south-african-sql-server-user-group-revived/

For the Designers

Building the slide also gave me the platform for the first section, what is new for designers! So switching to edit mode I was able to demo the fact that textboxes can now contain rich text, so the entire title and agenda was a single textbox with different font styles and positioning. Below that you can see the communities worth supporting with two different colours! In SQL 2005 you were limited to a single font configuration per textbox, so to do the above in 2005 would have taken 7 textboxes!

Next I showed off the new design surface improvements, which make it no longer feel like an annoying grid but a real smooth surface. There are also enhancements like the guide lines, which snap you to other design elements, and the distance tooltips, which show your distance from other elements (see below).

I then showed off my favorite feature, UNDO and REDO. I know it seems small, but the UNDO in 2005 reloaded entire reports and took forever to do. Now there is a real instant UNDO!

We’ve been Robbed

Next I went into what had been taken out… sort of. First was the data tab (its absence is highlighted in red below), which has moved to its own window. It feels so much slicker there! And then I spoke about the fact that Table, Matrix and List were gone, and what the table, matrix and list tools are now! I won’t retell the story on that because Teo Lachev has a better description at his blog.

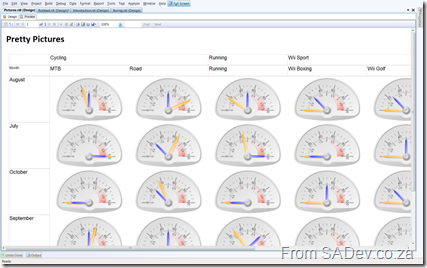

Pretty Pictures

From there I moved into showing off the new Dundas based charting and gauge controls which make your reports look super slick and demo'd why gauges are great for showing multiple pieces of data at the same time (in my demo the distance and time of my exercising):

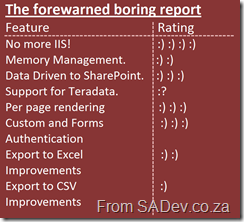

The Boring Slide

Lastly I ended with a “slide” on things I couldn’t demo but are noteworthy and I included a smiley rating scale on how noteworthy they are:

- No More IIS: SRS 2008 no longer requires IIS to run! It actually has its own web server, and this means that not only does it scale better, it is also a true middle tier application.

- Memory Management: Because SRS 2008 is in charge of everything now and not needing IIS, you can limit how much RAM is used!

- Data Driven to SharePoint: You can now use a data driven subscription (i.e. one which is based on data in the DB) to publish to SharePoint.

- Support for Teradata: A lot has been said on this, so I assume it is important (I saw some heads nod when I spoke about it in my talk), but as I have never used Teradata it got the confused smiley.

- Per page rendering: This is big, it no longer renders the entire report at once. Now it just renders a page at a time! Great for those massive reports.

- Custom and forms based authentication: A really great feature for hybrid environments! Also combined with no more IIS those Kerberos issues between CRM and SRS should be a thing of the past!

- Export to Excel: In my series on complex report building, the last part mentioned the horror that was exporting to Excel and how sub reports generated ugly grey blocks! Well that is no longer the case. YEAH!

- Export to CSV: Has been improved to export just data. I did point out that there are limitations (like values from gauges will not be included if you use CSV) so be careful.

I lastly mentioned a new tool called Report Builder, which is an 18MB download and gives an Office (ribbon bar) experience to building 2008 reports. It is really great in that it has low overheads (no Visual Studio requirements), it has all the design surface features I mentioned at the start and it is very easy to get up and running. It does require a full SRS server to be available if you want to run the report, so no preview mode like in SQL BI Studio. That said it is great for power users and I see the real value coming in the future when you need to work with old reports and don’t want to install old versions of Visual Studio, like we have to with 2003 and 2005! I mentioned on Saturday it was RC1 status; well, it seems that was a lie because at 3:30am Saturday they released the RTM version!!!! For more and to download it (it’s free) see: http://blogs.msdn.com/robertbruckner/archive/2008/10/17/report-builder-20-release.aspx

Finally thanks to Eben and Ahmed for arranging the event and to EVERYONE who attended!

S.A. Architect - LINQ Drilldown

One of the perks of being a registered member of S.A. Architect is that when the ATC team at Barone Budge and Dominick run a training session we keep a seat open for a community member. Coming up at the end of this month is my first full day session *gulp* which aims to cover LINQ. Being the shameless self promoting type, I thought I would do a little blurb on what you can expect if you, said S.A. Architect member, decide to join us.

Remember attendance for this one member is FREE! First you come to our excellent collab centre where you will have your own PC to work on for the day (sorry, can’t take it home with you) and I will be taking you through 28 HANDS ON labs during the day! Most are small labs (under 15min) so don’t worry about leaving late. Mindful that you are here for the full day, you get a free hot lunch (always cool) and I promise to personally guide you to the vending machines (snacks and coke) which will be free too! You will also take home a printed copy of the manual created for this session. I am waiting for the final proof to be developed but I suspect it will be about 200 pages in length in the end! Next I will be asking questions during the day (to see who is sleeping) and for prizes there are the stress balls. I am hoping we will have a few t-shirts left after Rhodes to give away to people answering questions too. Lastly you get to meet some very smart people and do some networking!

Before it sounds like this is super special for my session, most of this (lunch, snacks, prizes, networking) is available at most of the full day sessions we run!

This is a session for someone who knows nothing of LINQ and we will start at the basics and go through to some level 300 stuff in the end. You do need to know basic C# (if you know what I mean by saying: add the using System.Data.Linq, you will be fine). The day will cover:

- The Problem which LINQ solves

- LINQ Architecture

- Implicitly Typed Variables

- Use LINQ to Objects to get and sort data and understand what is happening using the basic LINQ program.

- Anonymous Types

- LINQ Query Execution

- Anonymous Methods and Lambda Expressions

- LINQ Query Operators

- LINQ to XML

- LINQ to SQL

So if you are interested, first register at S.A. Architect. Then let Willy know you wish to attend! Dates/times etc… can be found here!

Dev4Devs - This Saturday

I also saw on Eben’s post that two of my absolutely favorite presenters are speaking, namely Rudi Grobler (he is speaking on what’s new in 3.5 SP 1 for client development) and Brent Samodien (he is speaking on consuming ASP.NET data services using AJAX… which I hope means he will show ADO.NET Data Services, will have to wait until Saturday to find out).

Rhodes students, you lucky bunch!

Willy posted about the upcoming trip to Rhodes (see here) which is really interesting. I am not going along for the road trip but I have had a chance to see what swag will be given away, and let me state that those at Rhodes who are attending are going to be the envy of those who do not attend. Not only are there the standard flyers and free pens, but a great t-shirt (I got my hands on a development one! There are also three others… but that can be a surprise) and a DVD with over 3.5GB of content. I have been looking at the DVD content and there are not only full copies of the 3 books Willy has written on there but copies of all the content from S.A. Architect! Personally I rate that the swag is as good as what you would find at a major event like Tech-Ed!