Enabling Flip 3D with Logitech Performance MX Mouse

I purchased a Logitech Performance MX Mouse (to replace my other Logitech V450 mouse) which has a number of fantastic features:

- Darkfield optical sensor which works on everything, including glass

- The awesome and tiny wireless pickup that can remain plugged in all the time.

- Ability to charge off of USB and still work!

- 7 Buttons

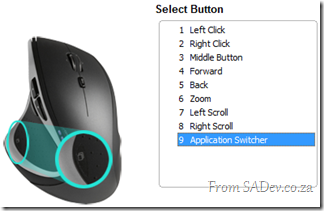

One of those 7 buttons defaults to a feature called Application Switcher. For Mac users this is exactly like Exposé, and for Windows users it is like a full-screen task switcher.

In fact, on a Mac it runs Exposé, but on Windows it is a custom application that really doesn’t have the feel or the polish of Flip 3D (the Windows+Tab thing), which ships with Windows Vista and Windows 7.

So how do you change this? There are many posts about using macros, assigning specific applications or even hacked drivers, but in my experience none of that is needed any longer. It appears most people do not know about the built-in option because it is so well hidden.

The first step is to download the latest version of SetPoint, the mouse software, from Logitech, which at the time of writing is 6.2. However, the mouse actually ships with 4.7, a version over 3 years old! This is really odd since the mouse was launched less than a year ago!

Once upgraded, go to the Button Settings section, select the button and set the task to Other; this will bring up a dialog with a massive drop-down full of options. If you look in here for Flip 3D, or some variation on application switcher, you will not find it. However, there is an option called Document Flip which, as you have guessed, is Flip 3D.

Set your mouse to this and voilà!

Resolving the AppFabric: ErrorCode<ERRCA0017>:SubStatus<ES0006>

AppFabric Caching has one error which you will learn to hate:

ErrorCode<ERRCA0017>:SubStatus<ES0006>:There is a temporary failure. Please retry later. (One or more specified Cache servers are unavailable, which could be caused by busy network or servers. Ensure that security permission has been granted for this client account on the cluster and that the AppFabric Caching Service is allowed through the firewall on all cache hosts. Retry later.)

This message could mean a variety of different things, such as:

- Client vs. Server security is wrong

- Disposing the factory too soon

- Not enough physical memory

- Corruption of the cache

However, for me none of those were the cause of my pain. My issue was:

Copy & Paste Stupidity

I copied and pasted the settings for my deployment and so I had the following config issue:

    <dataCacheClient>
      <!-- cache host(s) -->
      <hosts>
        <host name="cacheServer1" cachePort="22233"/>
      </hosts>
    </dataCacheClient>

However my server was DEMO-PC, so I needed to change that to the following:

    <host name="DEMO-PC" cachePort="22233"/>

The only way I found this was to hit the event log and scroll down through the error message. About halfway down was the cause, as clear as day.
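If you would rather catch this kind of mistake before the error message does, a small script can check that every host named in the dataCacheClient section actually accepts connections on its cache port. The following is only a minimal sketch in Python, not something that ships with AppFabric: the names parse_cache_hosts and is_reachable are made up for illustration, and it assumes the client config has the shape shown above.

```python
import socket
import xml.etree.ElementTree as ET

# The copy-and-pasted client settings from the post.
SAMPLE_CONFIG = """
<dataCacheClient>
  <!-- cache host(s) -->
  <hosts>
    <host name="cacheServer1" cachePort="22233"/>
  </hosts>
</dataCacheClient>
"""


def parse_cache_hosts(config_xml):
    """Return (name, port) pairs for every <host> under <dataCacheClient>."""
    root = ET.fromstring(config_xml)
    hosts = []
    # iter() also yields the root, so this works on the bare fragment
    # or on a larger config file that contains it.
    for client in root.iter("dataCacheClient"):
        for host in client.iter("host"):
            hosts.append((host.get("name"), int(host.get("cachePort"))))
    return hosts


def is_reachable(name, port, timeout=3.0):
    """True if the name resolves and a TCP connection to the port succeeds."""
    try:
        with socket.create_connection((name, port), timeout=timeout):
            return True
    except OSError:
        # Covers failed name resolution, refused connections and timeouts.
        return False
```

Calling is_reachable for each pair returned by parse_cache_hosts(SAMPLE_CONFIG) should flag cacheServer1 on a network where only DEMO-PC exists. A successful connection only shows that something is listening on that port; it says nothing about the security settings.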

Reporting Services - Missing features in SRS 2008

I recently helped on an interesting problem with SQL Reporting Services, where features of SRS 2008 were just gone!

History

The team had created a number of reports in SQL Server 2005 and used them successfully for a number of years. During their upgrade to SQL Server 2008 they needed to upgrade the reports to SRS 2008 format.

To be clear here, SRS doesn’t support backwards compatibility, so if you want to run a report built in 2005 on a 2008 server, it must be upgraded. Thankfully this is a simple process: just open the report solution in Business Intelligence Development Studio (BIDS) 2008 and it will upgrade it.

The Problem

The team knew this process and opened the 2005 reports in BIDS 2008. No errors were reported, and all the reports saved correctly and were published to the SRS server. The server then rendered them, which to everyone meant that the reports had upgraded successfully.

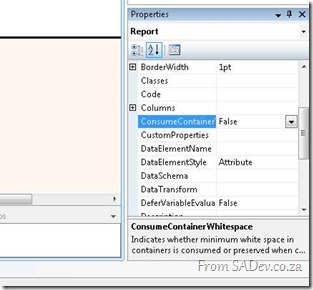

However, there were some changes to how the reports were rendered, and the team needed to set the ConsumeContainerWhitespace property on the report to true to fix that rendering issue.

This is a new property in 2008, and the problem the team had is that when the report was opened in BIDS 2008 the property just wasn’t there! They could create new reports and see it, but their existing reports did not contain it.

Diagnosis

The first thing I checked was that they were opening the right version of BIDS – this is the SRS person’s way of saying “Have you tried turning it off and on?”.

They verified that quickly, so my next step was to check whether BIDS was actually doing the upgrade. A report is just an XML file, so you can open it in Notepad and check the schema namespace to see which version of the report it is. If it is http://schemas.microsoft.com/sqlserver/reporting/2008/01/reportdefinition it is a 2008 report, and if it is http://schemas.microsoft.com/sqlserver/reporting/2005/01/reportdefinition it is a 2005 report.
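If you have a folder full of reports, the same check can be scripted rather than eyeballed in Notepad. This is a minimal sketch in Python under the assumption that the namespace on the root element is what identifies the version, as described above; the function name report_schema_version is hypothetical.

```python
import xml.etree.ElementTree as ET

# The two schema namespaces mentioned in the post, mapped to their version.
KNOWN_SCHEMAS = {
    "http://schemas.microsoft.com/sqlserver/reporting/2005/01/reportdefinition": "2005",
    "http://schemas.microsoft.com/sqlserver/reporting/2008/01/reportdefinition": "2008",
}


def report_schema_version(report_xml):
    """Return "2005", "2008", or None when the namespace is not recognised."""
    root = ET.fromstring(report_xml)
    # ElementTree renders a namespaced tag as "{namespace}LocalName".
    if root.tag.startswith("{"):
        namespace = root.tag[1:].split("}", 1)[0]
    else:
        namespace = ""
    return KNOWN_SCHEMAS.get(namespace)
```

Passing in the contents of a report file, for example the bytes from open(path, "rb").read(), gives you the version without opening each file by hand.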

You know what, after the upgrade it was STILL showing as a 2005 report! This confused me to no end – how could SRS 2008 render a 2005 report and why was BIDS not upgrading it?

Solution

I was close to getting the team to call Microsoft, or an exorcist, for assistance – but after re-reading the email conversation between the team and me a number of times, I stumbled across the piece of missing information which explained the cause. The team was using .rdlc files – these are SRS files designed for client-side rendering. SRS supports these and the “normal” .rdl files.

The interesting thing about .rdlc files is that they are frozen on 2005:

- They do not get the new features of 2008 or 2008 R2, which is why the new properties (like the one the team wanted) do not appear.

- The schema remains on 2005 (no upgrade in BIDS).

- To confuse everyone, they still render on 2008 and 2008 R2!

Thankfully you can convert from .rdlc to .rdl, which means the reports then get upgraded to the 2008 or 2008 R2 format and get all the features. The team did this and has been smiling ever since!

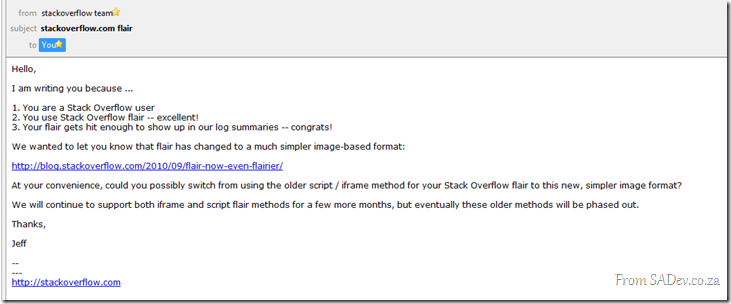

StackExchange Flair

For a while the flair on my site has included my stats from StackOverflow, ServerFault and SuperUser. In my article on it, I mentioned I used the iFrame, but I stopped that a few months ago and switched to getting the JSON data for my accounts directly and parsing that. I did this as it was orders of magnitude faster than loading via the iFrame.

Those who attended my DevDays talks would recognise that code, as it is the same code I used in my demos.

Then recently an email arrived:

Damn, my jQuery magic was about to end so what could I do but change? When I started looking at the new flair I noted that StackOverflow wanted me to hotlink the image, i.e. have my visitors get it from their server, but the performance for pulling the image was still poor compared to my own website (or so the Firebug tool says). So what could I do to improve this?

What I did was use wget, a Linux tool for downloading files (I’m hosted on a Linux box), and schedule it to download my StackExchange flair once a day and store it on my website, which means it gets served faster. As my numbers won’t change heavily day to day (I’m not Skeet), once a day is a good enough balance between keeping it fresh and making it cacheable.

The only downside is that my flair usage stats on StackExchange will likely drop, but I don’t really care about that.

The wget command is:

    wget http://stackexchange.com/users/flair/1c5ab06b9a844e49b817e7eeb31977e0.png -O <path>/files/stackexchange.png
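If wget is not available, the same "download once a day and serve a local copy" idea can be sketched in Python. To be clear, this is not what I run; it is a stand-in for the wget command above, and the function name refresh_cached_file is made up. It writes to a temporary file first and then swaps it into place, so a visitor should never be served a half-written image.

```python
import os
import tempfile
import urllib.request


def refresh_cached_file(url, destination):
    """Download url and atomically replace destination with the new copy."""
    directory = os.path.dirname(os.path.abspath(destination))
    with urllib.request.urlopen(url, timeout=30) as response:
        data = response.read()
    # Write next to the destination so the final rename stays on one file system.
    fd, temp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as handle:
            handle.write(data)
        # mkstemp creates the file readable by its owner only; open it up
        # so the web server account can read it too.
        os.chmod(temp_path, 0o644)
        os.replace(temp_path, destination)
    except BaseException:
        os.unlink(temp_path)
        raise
```

You would call it with the flair URL and the path of the image on the site, and run it from whatever scheduler the host provides.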

Internet Explorer 9 breaks with localhost

There is a known bug for this: 601047. This is resolved with RTM!

You can hear Eric Lawrence talk about this bug in the Herding Code Podcast.

Internet Explorer 9 works great, except when it doesn’t, and it seems to not work for developers more than most, or maybe it’s just me (could the IE9 team be targeting me?).

Paranoia aside, there is an issue where, when testing web applications (ASP.NET, MVC) or Silverlight applications from Visual Studio (i.e. pressing F5), IE9 just refuses to load them. Thankfully this has been confirmed by other people.

What is going on and how do we solve this? Because it is really frustrating and it also makes for bad demos (especially with TechEd around the corner).

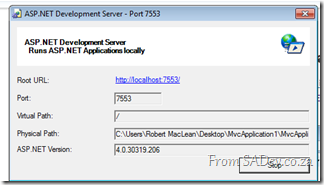

The first part of the problem is the ASP.NET Development Server which is what is hosting your websites when you hit F5.

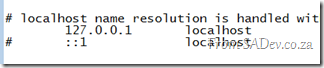

Next part of the problem is Windows, especially since it assumes IPv6 is better than IPv4. Note in the picture below that when you ping localhost you get an IPv6 address.

So what appears to be happening is that when IE9 tries to go to localhost it uses IPv6, but the ASP.NET Development Server is IPv4 only, so nothing loads and we get the error.

To solve this, fire up Notepad in administrator mode, navigate to <windows directory>\system32\drivers\etc\ and open the hosts file. Inside you will find a number of lines prefixed with a hash (which makes those lines comments). Remove the hash from the line which has 127.0.0.1 in it, as below, and save.

This will cause Windows to resolve localhost to IPv4 first (you can confirm by pinging localhost) which means that IE9 will do the same and now it just works every time.
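If you want to check a machine without opening the hosts file by hand, the test is simple enough to script. The sketch below is in Python and makes a couple of assumptions: the function names are hypothetical, and it only looks for an uncommented line that maps 127.0.0.1 to localhost, which is the change described above.

```python
import socket


def has_ipv4_localhost(hosts_text):
    """True if an uncommented line maps 127.0.0.1 to the name localhost."""
    for line in hosts_text.splitlines():
        # Everything after a hash is a comment, so drop it before parsing.
        entry = line.split("#", 1)[0].split()
        if len(entry) >= 2 and entry[0] == "127.0.0.1":
            if "localhost" in (name.lower() for name in entry[1:]):
                return True
    return False


def localhost_addresses():
    """Addresses the OS resolver gives for localhost, in the order returned."""
    infos = socket.getaddrinfo("localhost", None, proto=socket.IPPROTO_TCP)
    return [info[4][0] for info in infos]
```

Feed has_ipv4_localhost the text of the hosts file; localhost_addresses is the scripted equivalent of pinging localhost and looking at which address comes back.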

Redirected down a one way: Clearing the Internet Explorer host redirect cache

Internet Explorer 9 is fast, really, really fast! A lot of that speed comes from the massive caching improvements in IE9 – but this is a bit of a double-edged sword, especially for developers, when caching gets in the way of seeing what is actually happening. I spent two hours debugging an odd caching issue recently and this is the sad story.

For some testing I needed to set up a redirect, in this case a 301 permanent redirect (handy HTTP status codes cheat sheet in case you don’t remember these). What this would do is enable me to have site alpha (http://localhost:5000/Demo) redirect to site beta (http://localhost:9000/Demo).

Prior to this the scenario looked like this:

Behind the two browser windows is the IE 9 Developer tools and their fantastic new network capture feature. You can easily see that when I hit site alpha I got a 200 result, meaning all good and it loaded.

Once I set up the redirect, you’ll see I get a 304; this is because the data is already cached. Note that even though I typed in the site alpha URL, it immediately loaded site beta. This is because the browser had cached the redirect and so skipped the network steps for performance.

Now the problem: I wanted to turn off the redirect, but the browser had cached it and so would ignore the change. Clearing the cache, deleting files, rebooting and even using the IE reset option did nothing to solve this.

The only way to fix it was to fire up the fantastic Fiddler tool and use its Clear Cache option with the option to delete persistent cookies, which flushes the WinINET cache.

Considering that this is supposedly the same as clearing the IE cache, I have no idea why this works and IE cache clearing doesn’t, but it does work.
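One way to avoid getting stuck like this in the first place is to have the test redirect tell the browser not to store it. The sketch below is an assumption-laden illustration in Python, not the setup I used: it stands up a tiny server for site alpha that answers every GET with a 301 to site beta plus a Cache-Control: no-store header, which should stop a well-behaved browser from remembering the redirect. The names RedirectHandler and make_server are hypothetical.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Site beta from the post; site alpha is whatever port this server listens on.
TARGET = "http://localhost:9000/Demo"


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self.send_response(301)
        self.send_header("Location", TARGET)
        # Ask the browser not to keep this redirect once testing is over.
        self.send_header("Cache-Control", "no-store")
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, format, *args):
        # Keep the console quiet; remove this override to see each request.
        pass


def make_server(port=5000):
    """Create (but do not start) the redirecting server for site alpha."""
    return HTTPServer(("127.0.0.1", port), RedirectHandler)
```

Calling make_server().serve_forever() starts it on port 5000. Binding to 127.0.0.1 means it is IPv4 only, just like the ASP.NET Development Server.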

Cannot add a Service Reference to SharePoint 2010 OData!

SharePoint 2010 has a number of APIs (an API is a way we communicate with SharePoint). Some we have had for a while, like the web services, but one is new: OData. What is OData?

The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores.

The main reason I like OData over the web services is that it is lightweight, works well in Visual Studio and works easily across platforms, thanks to all the SDKs.

SharePoint 2010 exposes OData at http(s)://<site>/_vti_bin/listdata.svc and you can add this to Visual Studio in exactly the same way as a SharePoint web service: right click on the project and select Add Service Reference.

Once loaded, each list is shown as a contract on the left; to add them to your code, you just hit OK and start using them.

Add Service Reference Failed

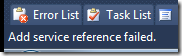

The procedure above works well, until it doesn’t, and oddly enough at my current work I found a situation which caused the add reference to fail! The experience isn’t great when it does fail – the Add dialog closes and pops back up blank! Try it again and it disappears again, but this time it stays away.

If you check the status bar in VS, you will see an error message indicating it has failed – but by this point you may find the service reference is listed in the project even though no code works, because the adding failed.

If you right click and say delete, it will also refuse to delete because the adding failed. The only way to get rid of it is to close Visual Studio, go to the service reference folder (<Solution Folder>\<Project Folder>\Service References) and delete the folder in there which matches the name of your service. You will now be able to launch Visual Studio again, and will be able to delete the service reference.

What went wrong?

Since we have no way to know what went wrong, we need to get a lot more low level. We start off by launching a web browser and going to the metadata URL for the service: http(s)://<site>/_vti_bin/listdata.svc/$metadata

In Internet Explorer 9 this just gives a useless blank page, but if you use the right click menu option in IE 9, View Source, it will show you the XML in Notepad. This XML is what Visual Studio is taking, trying to parse and failing on. To diagnose the cause we need to work with this XML, so save it to your machine with a .csdl file extension. We need this special extension because the next tool we will use refuses to work with files without it.

The next step is to open the Visual Studio Command Prompt and navigate to where you saved the CSDL file. We will use a command line tool called DataSvcUtil.exe. This may be familiar to WCF people who know SvcUtil.exe, which is very similar, but this one is specifically for OData services. All it does is take the CSDL file and produce a code contract from it. The syntax is very easy: datasvcutil.exe /out:<file.cs> /in:<file.csdl>

Immediately you will see a mass of red, and you know that red means error. In my case I have a list called 1 History, which in the OData service is known by its gangster name _1History. This problem child is breaking my ability to generate code, which you can figure out by reading the errors.

Solving the problem!

Thankfully I do not need 1 History, so to fix this issue I need to clean the _1History references out of the CSDL file. I switched to Visual Studio, loaded the CSDL file and began removing all references to the troublemaker. I also needed to remove the item contract for the list, which is __1HistoryItem. I started by removing the item contract EntityType, which is highlighted in the image alongside.

The next cleanup step is to remove all the associations to __1HistoryItem.

Finally, the last item I need to remove is the EntitySet for the list:

BREATHE! RELAX!
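If you have to repeat the manual cleanup above every time the metadata changes, it can be scripted. The following Python is a rough sketch only, and it rests on assumptions: that the file follows the usual CSDL layout (EntityType, Association with End elements carrying a Type, AssociationSet, EntitySet, and NavigationProperty elements pointing at an association through Relationship), and that removing navigation properties which point at a deleted association is acceptable. The function name remove_list is made up. ElementTree may also rewrite the namespace prefixes on output, so keep a copy of the original file.

```python
import xml.etree.ElementTree as ET


def local_name(element):
    """Strip the "{namespace}" prefix ElementTree puts on tags."""
    return element.tag.rsplit("}", 1)[-1]


def remove_list(csdl_xml, entity_set, entity_type):
    """Return CSDL text with one list, and everything referring to it, removed."""
    root = ET.fromstring(csdl_xml)
    parent_of = {child: parent for parent in root.iter() for child in parent}

    def mentions_type(value):
        # Type references are namespace-qualified, e.g. "Some.Namespace.Item".
        return value == entity_type or value.endswith("." + entity_type)

    doomed = []
    dead_associations = set()
    for element in root.iter():
        kind = local_name(element)
        if kind == "EntityType" and element.get("Name") == entity_type:
            doomed.append(element)
        elif kind == "EntitySet" and element.get("Name") == entity_set:
            doomed.append(element)
        elif kind == "Association" and any(
            mentions_type(end.get("Type", ""))
            for end in element
            if local_name(end) == "End"
        ):
            doomed.append(element)
            dead_associations.add(element.get("Name"))

    def mentions_association(value):
        return value.rsplit(".", 1)[-1] in dead_associations

    # Second pass: anything that points at an association removed above.
    for element in root.iter():
        kind = local_name(element)
        if kind == "AssociationSet" and mentions_association(element.get("Association", "")):
            doomed.append(element)
        elif kind == "NavigationProperty" and mentions_association(element.get("Relationship", "")):
            doomed.append(element)

    for element in doomed:
        parent_of[element].remove(element)
    return ET.tostring(root, encoding="unicode")
```

For the list in this post the call would be remove_list(text, "_1History", "__1HistoryItem"), with the result saved back to the .csdl file before re-running DataSvcUtil.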

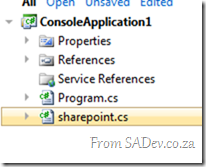

Ok, now the hard work is done and so I jump back to the command prompt and re-run the DataSvcUtil tool, and it now works:

This produces a file, in my case sharepoint.cs, which I am able to add to my project just like any other class file, and I am then able to make use of OData in my solution just like it is supposed to work!

Pulled Apart - Part XI: Talking to yourself is ok, but answering back is a problem. Why IMPF destroyed CPUS?

Note: This is part of a series, you can find the rest of the parts in the series index.

Pull for me is as much about learning as it is about writing a useful tool that others will enjoy, and often I head down a path only to learn it was wrong. Sometimes I realise before a commit and no one ever knows; other times it is committed, and reading the source history is like an example of how bad things can get; and sometimes I even ship bad ideas. IMPF is one such area, where I shipped a huge mistake which caused Pull to easily consume an entire CPU core while doing zero work.

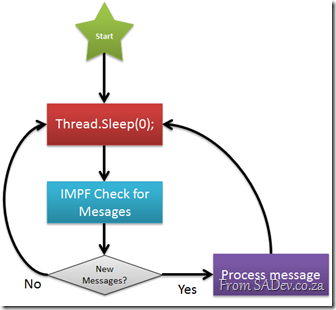

IMPF would check for messages using the following process:

The Thread.Sleep(0) is there to ensure application messages get processed, but it is zero so that as soon as a message arrives it is processed. This meant that the check, which did a lot of work, was running almost constantly, and so Pull ended up eating 100% of the power of a core.

The Solution

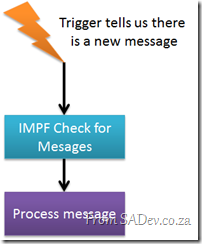

The solution to this was to change the process from constantly checking to getting notified when there is a new message.

This is also much simpler to draw than the other way. Maybe that should be a design check: the harder it is to draw, the less chance it works.
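To make the difference concrete, here is the general shape of "wait to be told" instead of "keep checking", sketched in Python. This is not Pull's code: Pull is C# and the notification is a window message, which the rest of this post covers. The class name MessageWatcher is made up, and a threading.Event stands in for that notification. The point is that the worker sits blocked, using no CPU, until someone calls notify().

```python
import threading


class MessageWatcher:
    """Runs check_messages only when notify() is called, instead of polling."""

    def __init__(self, check_messages):
        self._check_messages = check_messages
        self._signal = threading.Event()
        self._stopping = False
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()

    def notify(self):
        """Called by whoever wrote a message: wake the watcher up."""
        self._signal.set()

    def stop(self):
        self._stopping = True
        self._signal.set()
        self._thread.join()

    def _run(self):
        while True:
            # Blocks without using CPU until notify() or stop() sets the event.
            self._signal.wait()
            # Clear before checking, so a notify() that arrives during the
            # check triggers another pass rather than being lost.
            self._signal.clear()
            if self._stopping:
                return
            self._check_messages()
```

Several notifications arriving close together may collapse into a single check, which is fine as long as the check reads every waiting message.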

The only issue is how do I cause that trigger to fire from another application when it writes a message IMPF should read?

Windows Messaging

Windows internally has a full message system which you can use to send messages to various components in Windows, for example to turn the screen saver on or off, or to send messages to applications. I have used this previously in Pull to tell Windows to add the shield icon if needed (see Part IX) to the protocol handler buttons.

I can also use it to ping an application with a custom message which that application can act on. For Pull when I get that ping I know there is a new IMPF message.

The first part of this is finding the window handle of the primary instance that I want to ping. This I do by consulting the processes running on the machine and, using a dash of LINQ, filtering them down to the primary instance.

    // Requires: using System; using System.Collections.Generic;
    //           using System.Diagnostics; using System.Linq;
    private static IntPtr GetWindowHandleForPreviousInstances()
    {
        Process currentProcess = Process.GetCurrentProcess();
        string processName = currentProcess.ProcessName;
        List<Process> processes = new List<Process>(Process.GetProcessesByName(processName));

        // Every process with the same name, excluding this one.
        IEnumerable<Process> matchedProcesses = from p in processes
                                                where (p.Id != currentProcess.Id) &&
                                                      (p.ProcessName == processName)
                                                select p;

        if (matchedProcesses.Any())
        {
            return matchedProcesses.First().MainWindowHandle;
        }

        // No other instance is running.
        return IntPtr.Zero;
    }

Now I know who to ping, I just need to send a ping. This is done by calling the Win32 API SendNotifyMessage:

    public static int NotifyMessageID = 93956;

    // Requires: using System; using System.Runtime.InteropServices;
    private static class NativeMethods
    {
        // Returns immediately when the target window belongs to another thread,
        // as it does here, so the sender never waits on the receiver.
        [DllImport("user32.dll", SetLastError = true, CharSet = CharSet.Auto)]
        [return: MarshalAs(UnmanagedType.Bool)]
        public static extern bool SendNotifyMessage(IntPtr hWnd, int Msg, IntPtr wParam, IntPtr lParam);
    }

    public static void PingPreviousInstance()
    {
        IntPtr otherInstance = GetWindowHandleForPreviousInstances();
        if (otherInstance != IntPtr.Zero)
        {
            NativeMethods.SendNotifyMessage(otherInstance, NotifyMessageID, IntPtr.Zero, IntPtr.Zero);
        }
    }

That takes care of sending, but how do I receive the ping? What I need to do is override the WndProc method on my main form to check for the message, and if I get the right ID (the NotifyMessageID declared in the code above) I then act on it. In my case I use the bus to tell IMPF that there is a new message.

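    protected override void WndProc(ref Message message)
    {
        // The ping from another instance: tell IMPF to check for new messages.
        if (message.Msg == WinMessaging.NotifyMessageID)
        {
            this.bus.Broadcast(DataAction.CheckIPMF);
        }

        // Always pass the message on so normal window processing continues.
        base.WndProc(ref message);
    }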

This has enabled IMPF to only act when needed, removed a thread (since it no longer needs its own thread), simplified the IMPF code and made Pull a better citizen on your machine.

Visual Studio and/or Test Manager corrupt licensing?

At the Visual Studio & TFS event we had a few machines complaining that the Test Manager license was invalid and a new one was needed. Those same machines also said Visual Studio’s license was corrupted and that Visual Studio needed a re-install.

To make this more odd, we were using virtual machines so every machine was identical yet only some machines had this problem.

The cause was that the host OS date was wrong (the year was 2008), and so the virtual machines were also set to 2008. In the eyes of the virtual machine this meant that the license had magically been installed in the future.

We turned off the VM, deleted the state, fixed the date and started again and it was solved!

Resolving "Could not load type Microsoft.ApplicationServer.Caching.DataCacheSessionStoreProvider"

If you are using AppFabric and decide to swop out the ASP.NET standard caching with it you may run into the error:

Could not load type "Microsoft.ApplicationServer.Caching.DataCacheSessionStoreProvider"

The error will be pointing to the type of the custom session (line 5 below):

    <sessionState mode="Custom" customProvider="AppFabricCacheSessionStoreProvider">
      <providers>
        <!-- specify the named cache for session data -->
        <add name="AppFabricCacheSessionStoreProvider"
             type="Microsoft.ApplicationServer.Caching.DataCacheSessionStoreProvider"
             cacheName="TailSpin" sharedId="TailSpinTravelId"/>
      </providers>
    </sessionState>

The cause is that the application can’t find that class. To help it find it you need to add the following to the Configuration –> System.Web –> Compilation –> Assemblies:

    <add assembly="Microsoft.ApplicationServer.Caching.Client, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>
    <add assembly="Microsoft.ApplicationServer.Caching.Core, Version=1.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35"/>